Raku RSS Feeds

Elizabeth Mattijsen (Libera: lizmat #raku) / 2026-03-07T01:29:24

On behalf of the Rakudo development team, I’m very happy to announce the February 2026 release of Rakudo #190. Rakudo is an implementation of the Raku language.

The source tarball for this release is available from https://rakudo.org/files/rakudo. Pre-compiled archives will be available shortly.

The following people contributed to this release:

Will Coleda, Elizabeth Mattijsen, librasteve, David Simon Schultz, Eric Forste, Justin DeVuyst, Patrick Böker, Coleman McFarland, Daniel Green, Márton Polgár, 2colours, 4zv4l

28th German Perl/Raku Workshop (16th-18th March 2026 in Berlin)

Call for Talks is open: https://act.yapc.eu/gpw2026/talks

Elizabeth Mattijsen’s (lizmat) series has now expanded to 13 episodes on WHY RAKU GETS SHOUTY SOMETIMES:

From Unix Stackexchange:

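~$ raku -MText::CSV -e 'csv(in => csv(in => $*IN, sep => "|"), out => $*OUT);'

Raku’s Text::CSV module is called at the command line, and the one-liner shows two nested csv() calls: the inner one reads the pipe-separated input from $*IN, and the outer one writes it to $*OUT as comma-separated values.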

Sample Input:

1|a,b|4
1|c,d|4
1|e,f|4
1|g,h|4
1|i,j|4

Sample Output:

1,"a,b",4
1,"c,d",4
1,"e,f",4
1,"g,h",4
1,"i,j",4

Weekly Challenge #363 is available for your merriment.

For some time, the lazy coder in me has been tempted to pick some of lizmat’s nice SHOUTY things for this section. Today my base urges have won. Here are two examples:

First, this one is just a lovely small trick I already use:

say now - BEGIN now
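Spelled out a little more, the trick works because BEGIN used as an rvalue evaluates its expression once, while the program is being compiled, and bakes the result into the compiled code; the other now is evaluated at run time. A sketch with illustrative variable names:

```raku
# `BEGIN now` is evaluated once, during compilation; the resulting
# Instant is stored in the compiled program.
my $compiled-at = BEGIN now;

# This `now` is evaluated at run time, so the difference (a Duration)
# is the time between compilation and reaching this statement.
my $elapsed = now - $compiled-at;

say "seconds since compilation: $elapsed";
```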

Second, here is a new one on me:

=begin pod

This is documentation

=end pod

CHECK {

use Pod::To::Text;

say pod2text($=pod);

}

Which produces:

This is documentation

According to Liz’s article:

The CHECK phaser gets executed once the entire source code has been compiled into an AST. It comes both in Block and thunk flavours.

In the next language level of Raku, this will allow you to actually modify the AST before it is being turned into bytecode. But we’re not there yet.
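To see where CHECK sits relative to the other run-once phasers, here is a small sketch (variable names are illustrative) that uses the thunk flavour of BEGIN, CHECK, and INIT to capture the moment each of them runs:

```raku
# Each phaser is used as an rvalue: its value is computed when the phaser fires.
my $begin = BEGIN now;   # compile time, as soon as possible
my $check = CHECK now;   # compile time, as late as possible (whole file compiled)
my $init  = INIT now;    # run time, as soon as possible
my $main  = now;         # ordinary mainline code

say $begin <= $check <= $init <= $main;   # True
```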

Your contribution is welcome, please make a gist and share via the #raku channel on IRC or Discord.

Hats off to the core team for the new release. Really appreciate your stalwart efforts.

Please keep staying safe and healthy, and keep up the good work! Even after week 57 of hopefully only 209.

~librasteve

In this blog post (notebook) we calibrate the Heterogeneous Salvo Combat Model (HSCM), [MJ1, AAp1, AAp2], to the First World War Battle of Coronel, [Wk1]. Our goal is to exemplify the usage of the functionalities of the package “Math::SalvoCombatModeling”, [AAp1]. We closely follow Section B of Chapter III of [MJ1]. The calibration data used in [MJ1] is taken from [TB1].

Remark: The implementation of the Raku package “Math::SalvoCombatModeling”, [AAp1], closely follows the implementation of the Wolfram Language (WL) paclet “SalvoCombatModeling”, [AAp2]. Since WL has (i) built-in symbolic computation and (ii) a mature notebook system, computing, representing, and studying salvo models is much more convenient with WL.

Here we load the package:

use Math::SalvoCombatModeling;
use Graph;

The Battle of Coronel is a First World War naval engagement between three British ships (Good Hope, Monmouth, and Glasgow) and four German ships (Scharnhorst, Gneisenau, Leipzig, and Dresden). The battle happened on 1 November 1914, off the coast of central Chile near the city of Coronel.

The Scharnhorst and Gneisenau are the first ships to open fire at Good Hope and Monmouth; the three British ships soon afterwards return fire. Dresden and Leipzig open fire on Glasgow, driving her out of the engagement. At the end of the battle, both Good Hope and Monmouth are sunk, while Glasgow, Scharnhorst, and Gneisenau are damaged.

| Ship | Duration of fire (min) |
|---|---|
| Good Hope | 0 |
| Monmouth | 0 |
| Glasgow | 15 |
| Scharnhorst | 28 |
| Gneisenau | 28 |
| Leipzig | 2 |
| Dresden | 2 |

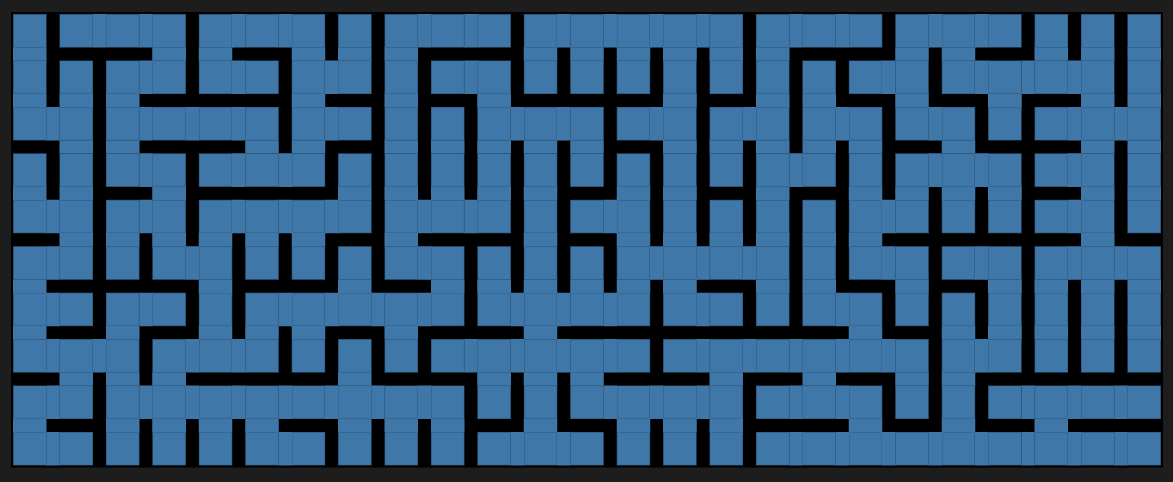

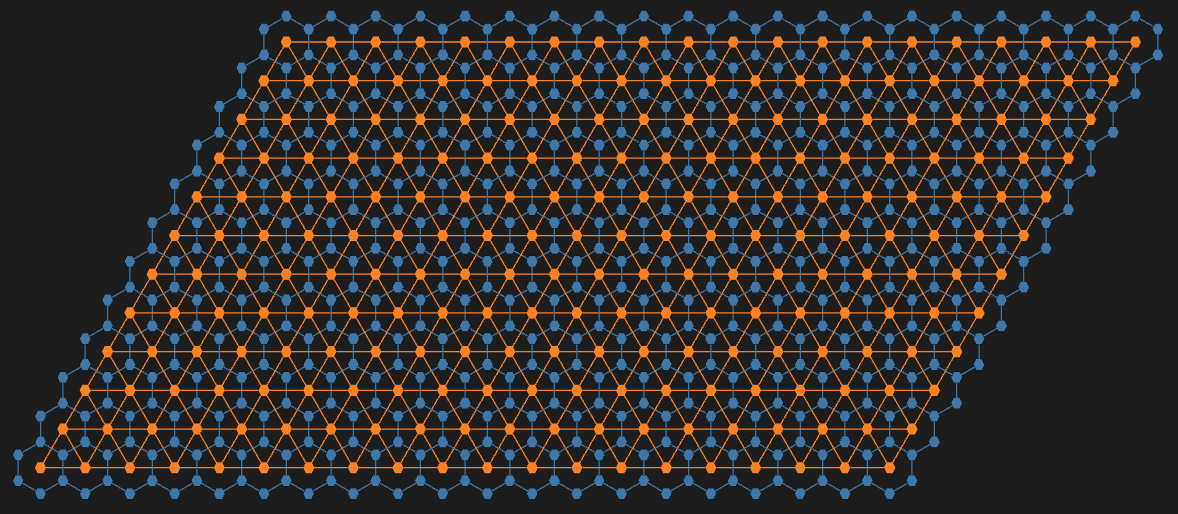

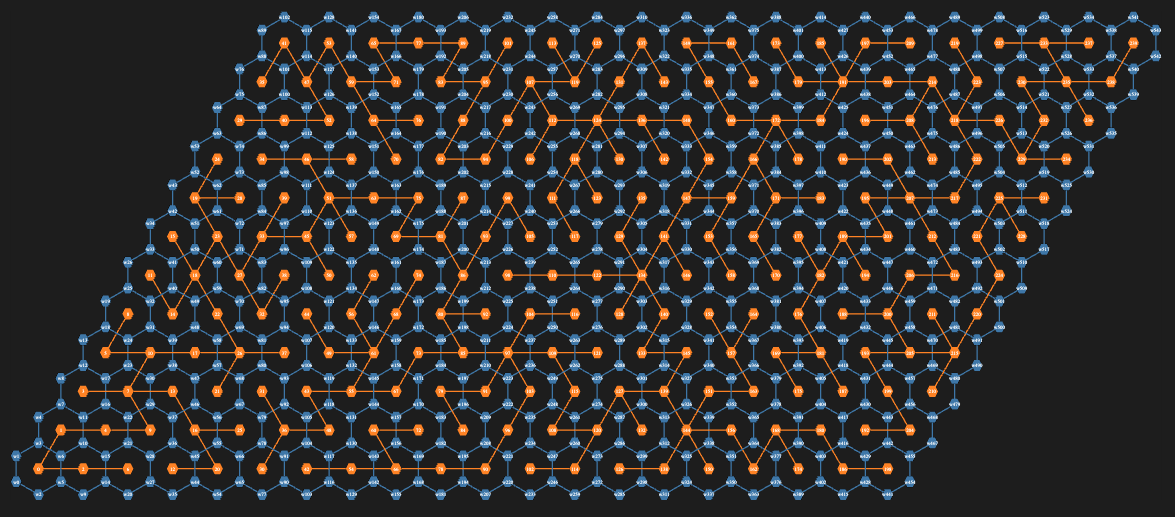

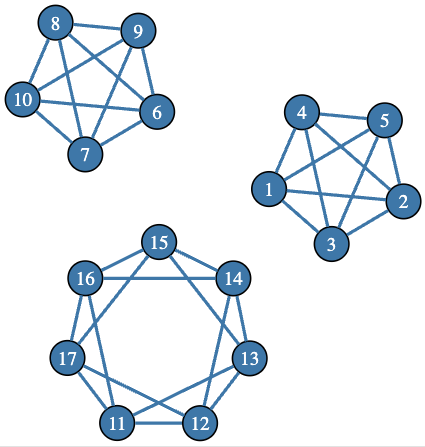

The following graph shows which ship shot at which ships and total fire duration (in minutes):

#% html
my @edges =
    { from => 'Scharnhorst', to => 'Good Hope',   weight => 28 },
    { from => 'Scharnhorst', to => 'Monmouth',    weight => 28 },
    { from => 'Gneisenau',   to => 'Good Hope',   weight => 28 },
    { from => 'Gneisenau',   to => 'Monmouth',    weight => 28 },
    { from => 'Leipzig',     to => 'Glasgow',     weight => 2 },
    { from => 'Glasgow',     to => 'Scharnhorst', weight => 2 },
    { from => 'Glasgow',     to => 'Gneisenau',   weight => 15 },
    { from => 'Glasgow',     to => 'Leipzig',     weight => 15 },
    { from => 'Glasgow',     to => 'Dresden',     weight => 15 },
    { from => 'Dresden',     to => 'Glasgow',     weight => 15 };
my $g = Graph.new(@edges):directed;
$g.dot(
    engine => 'neato', vertex-shape => 'ellipse', vertex-width => 0.65,
    :5size, :8vertex-font-size, :weights, :6edge-font-size,
    edge-thickness => 0.8, arrow-size => 0.6
):svg;

Before building the model, here is a table with definitions of the fundamental notions of salvo combat modeling:

#% html
salvo-notion-definitions('English')
==> to-html(field-names => <notion definition>, align => 'left')

| notion | definition |
|---|---|
| Force | A group of naval ships that operate and fight together. |
| Unit | A unit is an individual ship in a force. |
| Salvo | A salvo is the number of shots fired as a unit of force in a discrete period of time. |
| Combat Potential | Combat Potential is a force’s total stored offensive capability of an element or force measured in number of total shots available. |
| Combat Power | Also called Striking Power, is the maximum offensive capability of an element or force per salvo, measured in the number of hitting shots that would be achieved in the absence of degrading factors. |
| Scouting Effectiveness | Scouting Effectiveness is a dimensionless degradation factor applied to a force’s combat power as a result of imperfect information. It is a number between zero and one that describes the difference between the shots delivered based on perfect knowledge of enemy composition and position and shots based on existing information [Ref. 7]. |
| Training Effectiveness | Training effectiveness is a fraction that indicates the degradation in combat power due the lack of training, motivation, or readiness. |
| Distraction Factor | Also called chaff effectiveness or seduction, is a multiplier that describes the effectiveness of an offensive weapon in the presence of distraction or other soft kill. This multiplier is a fraction, where one indicates no susceptibility/complete effectiveness and zero indicates complete susceptibility/no effectiveness. |
| Offensive Effectiveness | Offensive effectiveness is a composite term made of the product of scouting effectiveness, training effectiveness, distraction, or any other factor which represents the probability of a single salvo hitting its target. Offensive effectiveness transforms a unit’s combat potential parameter into combat power. |
| Defensive Potential | Defensive potential is a force’s total defensive capability measured in units of enemy hits eliminated independent of weapon system or operator accuracy or any other multiplicative factor. |
| Defensive Power | Defensive power is the number of missiles in an enemy salvo that a defending element or force can eliminate. |
| Defender Alertness | Defender alertness is the extent to which a defender fails to take proper defensive actions against enemy fire. This may be the result of any inattentiveness due to improper emission control procedures, readiness, or other similar factors. This multiplier is a fraction, where one indicates complete alertness and zero indicates no alertness. |
| Defensive Effectiveness | Defensive effectiveness is a composite term made of the product of training effectiveness and defender alertness. This term also applies to any value that represents the overall degradation of a force’s defensive power. |
| Staying Power | Staying power is the number of hits that a unit or force can absorb before being placed out of action. |

The British ships are Good Hope, Monmouth, and Glasgow. They correspond to the indices 1, 2, and 3, respectively.

["Good Hope", "Monmouth", "Glasgow"] Z=> 1..3
# (Good Hope => 1 Monmouth => 2 Glasgow => 3)

The German ships are Scharnhorst, Gneisenau, Leipzig, and Dresden:

["Scharnhorst", "Gneisenau", "Leipzig", "Dresden"] Z=> 1..4
# (Scharnhorst => 1 Gneisenau => 2 Leipzig => 3 Dresden => 4)

Remark: The Battle of Coronel is modeled with a “typical” salvo model: the ships use “continuous fire.” Hence, there are no interceptors, or, in model terms, no defense terms or matrices.

Here is the model (for 3 British ships and 4 German ships):

sink my $m = heterogeneous-salvo-model(['B', 3], ['G', 4]):latex;

Remove the defense matrices (i.e. make them zero):

sink $m<B><defense-matrix> = ((0 xx $m<B><defense-matrix>.head.elems).Array xx $m<B><defense-matrix>.elems).Array;
sink $m<G><defense-matrix> = ((0 xx $m<G><defense-matrix>.head.elems).Array xx $m<G><defense-matrix>.elems).Array;

Converting the obtained model data structure to LaTeX, we get:

Next, we set the parameter values as in [MJ1] by defining the sub param (to be passed to heterogeneous-salvo-model):

multi sub param(Str:D $name, Str:D $a where * eq 'B', Str:D $b where * eq 'G', UInt:D $i, UInt:D $j) { 0 }

multi sub param(Str:D $name, Str:D $a where * eq 'G', Str:D $b where * eq 'B', UInt:D $i, UInt:D $j) {
    given $name {
        when 'beta' {
            given ($i, $j) {
                when (1, 1) { 2.16 }
                when (1, 2) { 2.16 }
                when (1, 3) { 2.16 }
                when (2, 1) { 2.16 }
                when (2, 2) { 2.16 }
                when (2, 3) { 2.16 }
                when (3, 1) { 2.165 }
                when (3, 2) { 2.165 }
                when (3, 3) { 2.165 }
                when (4, 1) { 2.165 }
                when (4, 2) { 2.165 }
                when (4, 3) { 2.165 }
            }
        }
        when 'curlyepsilon' {
            given ($i, $j) {
                when (1, 1) { 0.028 }
                when (1, 2) { 0.028 }
                when (1, 3) { 0.028 }
                when (2, 1) { 0.028 }
                when (2, 2) { 0.028 }
                when (2, 3) { 0.028 }
                when (3, 1) { 0.012 }
                when (3, 2) { 0.012 }
                when (3, 3) { 0.012 }
                when (4, 1) { 0.012 }
                when (4, 2) { 0.012 }
                when (4, 3) { 0.012 }
            }
        }
        when 'capitalpsi' {
            given ($i, $j) {
                when (1, 1) { 0.5 }
                when (1, 2) { 0.5 }
                when (2, 1) { 0.5 }
                when (2, 2) { 0.5 }
                when (3, 1) { 0 }
                when (3, 2) { 0 }
                when (4, 1) { 0 }
                when (4, 2) { 0 }
                when (1, 3) { 0 }
                when (2, 3) { 0 }
                when (3, 3) { 1 }
                when (4, 3) { 1 }
            }
        }
    }
}

multi sub param(Str:D $name, Str:D $a where * eq 'G', UInt:D $i) { 1 }

multi sub param(Str:D $name, Str:D $a where * eq 'B', UInt:D $i) {
    given $name {
        when 'zeta' {
            given $i {
                when 1 { 1.605 }
                when 2 { 1.605 }
                when 3 { 1.23 }
            }
        }
    }
}

multi sub param(Str:D $name where $name eq 'units', Str:D $a where * eq 'B', UInt:D $i) { $i }
multi sub param(Str:D $name where $name eq 'units', Str:D $a where * eq 'G', UInt:D $i) { $i }
# &param

my $m = heterogeneous-salvo-model(['B', 3], ['G', 4], :offensive-effectiveness-terms, :&param)
# {B => {defense-matrix => [[0 0 0] [0 0 0] [0 0 0]], offense-matrix => [[0.018841 0.018841 0 0] [0.018841 0.018841 0 0] [0 0 0.021122 0.021122]], units => [1 2 3]}, G => {defense-matrix => [[0 0 0 0] [0 0 0 0] [0 0 0 0] [0 0 0 0]], offense-matrix => [[0 0 0] [0 0 0] [0 0 0] [0 0 0]], units => [1 2 3 4]}}

$m<B><offense-matrix>
# [[0.018841 0.018841 0 0] [0.018841 0.018841 0 0] [0 0 0.021122 0.021122]]

my $ΔB = $m<B><offense-matrix>».sum
# (0.037682 0.037682 0.042244)

How many salvos it takes to inflict total damage on Good Hope and Monmouth:

1 / $ΔB.head
# 26.537698

That is close to the 28 min of fire by Scharnhorst and Gneisenau at Good Hope and Monmouth.
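As a sanity check, the offense-matrix entries can be reproduced by hand with plain Rat arithmetic. The printed values are consistent with each entry being beta × curlyepsilon × capitalpsi / zeta, using the param values for the firing German ship and the target British ship; note that this formula is inferred from the numbers above, not taken from the package documentation, and the helper name entry is hypothetical.

```raku
# Hypothetical helper; ASSUMES entry = beta * curlyepsilon * capitalpsi / zeta
sub entry($beta, $curlyepsilon, $capitalpsi, $zeta) {
    $beta * $curlyepsilon * $capitalpsi / $zeta
}

# Scharnhorst (or Gneisenau) firing at Good Hope (zeta = 1.605)
my $good-hope = entry(2.16, 0.028, 0.5, 1.605);
# Leipzig (or Dresden) firing at Glasgow (zeta = 1.23)
my $glasgow = entry(2.165, 0.012, 1, 1.23);

say $good-hope.round(0.000001);             # 0.018841
say $glasgow.round(0.000001);               # 0.021122

# Two German ships fire at Good Hope, so the per-salvo damage is twice one entry
say (1 / (2 * $good-hope)).round(0.0001);   # 26.5377
```

Because the literals are Rats, the arithmetic is exact until the final rounding, which makes the comparison with the notebook output straightforward.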

Total damage on Glasgow, given that Leipzig and Dresden fire at Glasgow for 2 min:

$ΔB.tail * 2
# 0.084488

[MJ1] Michael D. Johns, Steven E. Pilnick, Wayne P. Hughes, “Heterogeneous Salvo Model for the Navy After Next”, (2000), Defense Technical Information Center.

[TB1] Thomas R. Beall, “The Development of a Naval Battle Model and Its Validation Using Historical Data”, (1990), Defense Technical Information Center.

[Wk1] Wikipedia entry, Salvo combat model.

[AAp1] Anton Antonov, Math::SalvoCombatModeling, Raku package, (2026), GitHub/antononcube.

[AAp2] Anton Antonov, SalvoCombatModeling, Wolfram Language paclet, (2024), Wolfram Language Paclet Repository.

This blog post has been removed for legal reasons, at the request of the author.

This is part thirteen in the "Cases of UPPER" series of blog posts, describing the Raku syntax elements that are completely in UPPERCASE.

This part will discuss the various introspection methods that you can use on objects in the Raku Programming Language. Note that in some documentation these methods are also referred to as Metamethods.

In Raku everything is an object, or can be thought of as an object. An object is an instantiation of a class (usually made by calling the new method on it). A class is represented by a so-called "type object". Such a type object in turn is an instantiation of a so-called meta class. And these meta classes are themselves built out of more primitive representations.

Going this deep would most definitely be out of scope for these blog posts. But yours truly does intend to go there at some point in the future.

The WHAT method returns the type object of the given invocant. Not much else to tell about it really.

say 42.WHAT; # (Int)

say "foo".WHAT; # (Str)

say now.WHAT; # (Instant)
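Because WHAT returns the type object itself, its result can be compared directly with a type. A few small checks along those lines (the variable name is illustrative):

```raku
my $x = 42;

say $x.WHAT === Int;    # True: the type object of an Int value is Int
say $x.WHAT.defined;    # False: type objects are not concrete instances
say Int.WHAT === Int;   # True: WHAT on a type object returns that type object
```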

The HOW method returns the meta-object of the class of the given invocant. The HOW (b)acronym stands for "Higher Order Workings". It allows one to introspect the class of the invocant.

say 42.HOW.name(42); # Int

say "foo".HOW.name("foo"); # Str

say now.HOW.name(now); # Instant

Note that the invocant of the HOW method needs to be repeated as the first argument of the introspection method's call. Why? Well, this is mostly to stay compatible with possible future versions of Raku.

Since one is usually only interested in the introspection aspect of HOW, a shortcut method invocation syntax (.^) was created that allows one to call the introspection method directly, without needing to repeat oneself:

say 42.^name; # Int

say "foo".^name; # Str

say now.^name; # Instant

Some other common introspection methods are mro (showing the base classes of the class of the value) and methods (which returns the method objects of the methods that can be called on the value):

say 42.^mro; # ((Int) (Cool) (Any) (Mu))

say 42.^methods.sort; # (ACCEPTS Bool Bridge Capture Complex...
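The same introspection methods work just as well on classes you write yourself. A sketch with a hypothetical Point class (its name and attributes are only for illustration):

```raku
class Point {
    has $.x = 0;
    has $.y = 0;
    method norm() { sqrt($!x ** 2 + $!y ** 2) }
}

say Point.^name;                              # Point
say Point.^mro.map({ .^name }).join(' ');     # Point Any Mu
say Point.^attributes.map(*.name).join(' ');  # $!x $!y
say so Point.^can('norm');                    # True
say Point.new(x => 3, y => 4).norm;           # 5
```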

Note that these meta-classes are classes themselves, so meta-methods can be called on them as well:

say 42.HOW.^name; # Perl6::Metamodel::ClassHOW

Yeah, there's still some legacy code that will need renaming under the hood!

The WHERE method returns the memory address of the invocant. It is of limited use in the Rakudo implementation as the memory location of an object is not guaranteed to be constant. As such, it is intended for (core) debugging only.

say 42.WHERE; # 2912024602280 (or some other number)

In the previous blog post the Scalar object was described. But the Scalar objects are nearly invisible. How can one obtain a Scalar object from a given variable? And find out its name from that?

The "secret" to that is the VAR method.

my $a;

say $a.VAR.^name; # Scalar

Each Scalar object provides at least these introspection methods: of, name, default and dynamic.

my Int $a is default(42) = 666;

say $a; # 666

say $a.VAR.of; # (Int)

say $a.VAR.name; # $a

say $a.VAR.default; # 42

say $a.VAR.dynamic; # False

The of method returns the constraint that needs to be fulfilled in order to be able to assign to the variable. The name method returns the name of the variable. The default method returns the default value (Any if none is specified).
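The default value is more than informational: assigning Nil to a variable resets it to its default. Redeclaring the variable from the example above so that the snippet stands on its own:

```raku
my Int $a is default(42) = 666;

$a = Nil;              # assigning Nil restores the container's default value
say $a;                # 42
say $a.VAR.default;    # 42
```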

The dynamic method returns True or False depending on whether the variable is visible to dynamic variable lookups. This usually only returns True for variables with the * twigil.
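A short sketch of the difference, with illustrative names: the variable declared with the * twigil reports True and can be found by a sub through its callers at run time, while the plain lexical reports False.

```raku
my $plain  = 1;
my $*level = 1;   # the * twigil makes this variable dynamic

say $plain.VAR.dynamic;    # False
say $*level.VAR.dynamic;   # True

# $*level is looked up through the chain of callers at run time
sub current-level() { $*level }
say current-level();       # 1
```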

The WHO method (for "who lives here?") is actually a bit of a misnomer. It should probably have been called OUR, because it returns the Stash of the type object of the invocant. A stash is an object that does the Associative role, and as such can be accessed as if it were a Hash. And the stash of a type object is the same namespace as our inside that package.

So for instance if you would like to know all classes that live in the IO package:

say IO.WHO.keys.sort; # (ArgFiles CatHandle Handle Notification Path Pipe Socket Spec Special)

Of course, you would know them better by their complete names, such as IO::ArgFiles, IO::CatHandle, IO::Handle, etc. In fact, the :: delimiter is a shortcut for using WHO:

say IO.WHO<Handle>; # (Handle)

say IO::Handle; # (Handle)

And that goes even further: foo:: is just short for foo.WHO:

say IO::.keys.sort; # (ArgFiles CatHandle Handle Notification Path Pipe Socket Spec Special)

A little closer to home: how would that look in a package that you define yourself, with an our-scoped variable in it:

package A {

our $foo = 42;

}

say A.WHO<$foo>; # 42

say A::<$foo>; # 42

say $A::foo; # 42

The careful reader will have noticed that package was used in the example. A very simple reason: class, role, and grammar are all just packages with different HOWs. And WHO doesn't care what kind of package it is.
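Since class is just another kind of package, the same stash access works on a class with our-scoped declarations. A sketch with a hypothetical Greeter class:

```raku
class Greeter {
    our $greeting = 'hello';                        # stash key: '$greeting'
    our sub greet($name) { "$greeting, $name" }     # stash key: '&greet'
}

say Greeter.WHO<$greeting>;         # hello
say Greeter::<$greeting>;           # hello
say Greeter::greet('world');        # hello, world
say Greeter.WHO<&greet>('stash');   # hello, stash
```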

The REPR method returns the name of the memory representation of the class of the invocant. For most objects this is "P6opaque".

This is basically the representation used by class and its attribute specifications.

The NativeCall module provides a number of alternate memory representations, such as CStruct, CPointer and CUnion. Native arrays also have a different representation (VMArray).

say 42.REPR; # P6opaque

my int @a;

say @a.REPR; # VMArray

When a class is defined, it gets the P6opaque representation by default.

class Foo { }

say Foo.REPR; # P6opaque
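A different representation can be requested with the repr trait, which is what NativeCall relies on to lay out a class like a C struct. A sketch with two hypothetical classes side by side:

```raku
use NativeCall;

# Laid out in memory like a C struct with two 32-bit integers
class CPoint is repr('CStruct') {
    has int32 $.x;
    has int32 $.y;
}

# An ordinary class gets the default representation
class PlainPoint {
    has $.x;
    has $.y;
}

say CPoint.REPR;       # CStruct
say PlainPoint.REPR;   # P6opaque
```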

Unless one is doing very deep core-ish work, how an object is represented in memory should not be of concern to you.

The DEFINITE method returns either True or False depending on whether the invocant has a concrete representation. This is almost always the same as calling the defined method. But in some cases it makes more sense in Raku to return the opposite with the defined method.

An example of this is the Failure class:

say Failure.new.DEFINITE; # True

say Failure.new.defined; # False

In general the defined method should be used. The DEFINITE method is intended to be used in very low-level (core) code. It's not all uppercase for nothing!

The reason Failure.new.defined always returns False is to make it compatible with the with statement, which tests for definedness.
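Here is that interplay in a small sketch. The sub parse-age is hypothetical; it returns a Failure instead of throwing immediately, and with then treats that Failure as "not defined":

```raku
# Hypothetical parser: returns a number, or a Failure for bad input
sub parse-age(Str:D $s) {
    $s ~~ /^ \d+ $/ ?? +$s !! fail "not a number: $s"
}

my $bad = parse-age('abc');
say $bad.DEFINITE;   # True: a Failure instance is a concrete object
say $bad.defined;    # False: this also marks the Failure as handled

with parse-age('42')  { say "age: $_" } else { say 'no age' }   # age: 42
with parse-age('abc') { say "age: $_" } else { say 'no age' }   # no age
```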

All of the introspection "metamethods" described in this blog post are actually parsed as macros directly generating low-level execution opcodes. This is really necessary in some cases (as otherwise information can be lost), and in other cases it's just for performance.

This doesn't mean that it's not possible to call this introspection functionality as a method: you can. For example:

my $a;

say "macro: " ~ $a.VAR.name; # macro: $a

say "method: " ~ $a."VAR"().name; # method: $a

Furthermore, for consistency, the same functionality of these methods is also available as subroutines.

my $b;

say "sub: " ~ VAR($b).name; # sub: $b

So if you're more at home in imperative programming, you can do that as well!

This concludes the thirteenth episode of cases of UPPER language elements in the Raku Programming Language, the sixth episode discussing interface methods.

In this episode the following macro-like introspection methods were discussed (in alphabetical order): DEFINITE, HOW, REPR, VAR, WHAT, WHERE, WHO.

Stay tuned for the next episode!

The third meeting was held on 21 February 2026 at 19:00 UTC. Four out of five long-standing issues were closed.

Details of the discussions were minuted here.

The next meeting will be held on 7 March 2026 at 19:00 UTC (20:00 CET, 14:00 EST, 11:00 PST, 04:00 JST (8 Mar), 06:00 AEDT (8 Mar)), and again with a one-hour maximum. If not all of these issues have been resolved, they will be moved to a future meeting.

Elizabeth Mattijsen’s (lizmat) series has now swollen to 12 episodes on WHY RAKU GETS SHOUTY SOMETIMES, including the gripping Method Not Found Fallback:

Weekly Challenge #362 is available for your kicks.

This week was inspired by another of jubilatious1’s one-liners: Replace the nth from end occurrence of a string … but the examples here are elaborated as regular code:

my $s = "a1 b2 c3 d4";

say ($s ~~ m:1st/\d/).Str; # → 1

say ($s ~~ m:2nd/\d/).from; # → 4

say ($s ~~ m:3rd/<[a..z]>\d/); # → 「c3」

say ($s ~~ m:nth(4)/\w/).Str; # → 2

The key feature here is the Regex Positional Adverb family, :1st, :2nd, :3rd and :nth(). See if you can work out how each line does its job to pick out the first digit, the start position of the second digit, the third letter-digit pair, and the fourth word character, respectively.

See https://docs.raku.org/language/regexes#Positional_adverbs for more information.
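The task from the original one-liner, replacing the nth occurrence counted from the end, can be built on the same adverb: count all matches with :g, then ask for the (total - n + 1)th match from the start with :nth. A minimal sketch; the sub name replace-nth-from-end is hypothetical:

```raku
# Replace the $n-th occurrence of $needle, counted from the END of $s.
sub replace-nth-from-end(Str:D $s, Str:D $needle, Str:D $with, UInt:D $n --> Str:D) {
    my $total = +$s.match(/$needle/, :g);        # number of occurrences
    return $s unless 1 <= $n <= $total;          # out of range: leave unchanged
    # the n-th from the end is the (total - n + 1)-th from the start
    my $m = $s.match(/$needle/, :nth($total - $n + 1));
    $s.substr(0, $m.from) ~ $with ~ $s.substr($m.to)
}

say replace-nth-from-end("a1 b2 c3 d4", " ", "_", 1);   # a1 b2 c3_d4
say replace-nth-from-end("a1 b2 c3 d4", " ", "_", 3);   # a1_b2 c3 d4
```

The .from and .to positions of the selected Match make the splice itself a simple pair of substr calls.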

Your contribution is welcome, please make a gist and share via the #raku channel on IRC or Discord.

New in 2026.01:

Improvements:

Fixes:

RakuAST:

Extracted from the latest Draft Changelog.

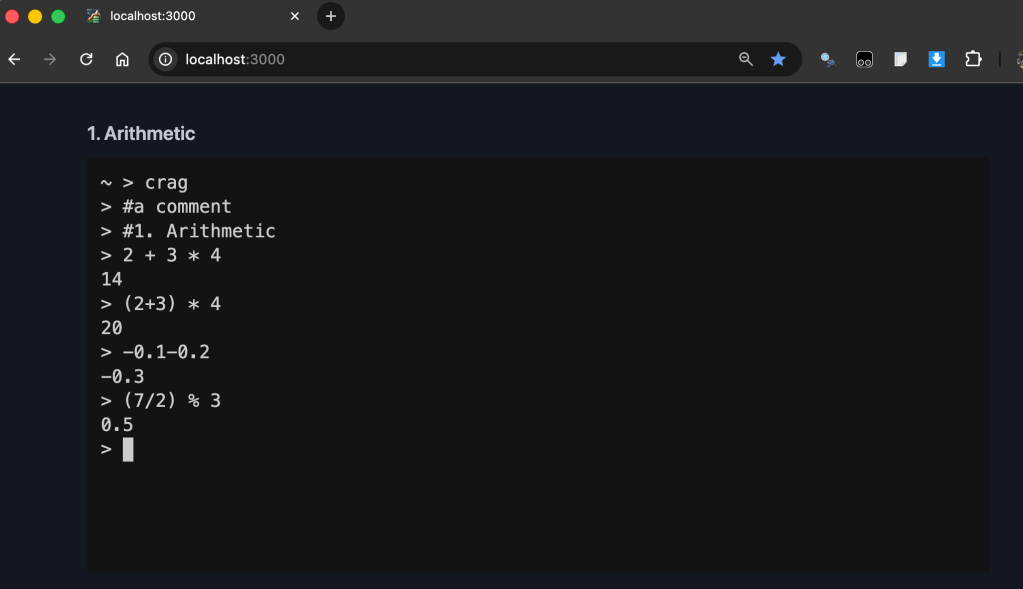

A couple of cool new modules this week. I can’t resist blowing my own trumpet and showing the synopsis of https://raku.land/zef:librasteve/Air::Plugin::Asciinema … -Ofun indeed!

#!/usr/bin/env raku

use Air::Functional :BASE;

use Air::Base;

use Air::Plugin::Asciinema;

my $site =

site :register[Air::Plugin::Asciinema.new],

page

main [

h3 '1. Arithmetic';

asciinema '/static/demos/demo1.cast';

];

;

$site.serve;

Please keep staying safe and healthy, and keep up the good work! Even after week 56 of hopefully only 209.

~librasteve

This is the third follow-up in the Raku Resolutions series.

The third meeting was held on 21 February 2026 at 19:00 UTC. Apart from 3 Raku Steering Council members, only 1 other person attended. In the end, 7 issues were discussed within the allotted time (1 hour).

After some discussion, it became clear that this issue basically has gone stale in light of the new raku.org site. Richard Hainsworth will create a new issue, after which this one can be closed.

unit be too late?

After some discussion it was agreed that from a language design point of view, it shouldn't really matter when the unit occurs in the code, as long as there's only one of them. And that the rest of the source would be considered to be part of that scope.

It was agreed that the documentation will describe this future state with the caveat that this is currently not yet the case. Eric Forste agreed to make a PR accordingly, after which the issue can be closed.

Being able to specify an EXPORT sub before a unit statement would be technically correct, but not very useful in practice, as an EXPORT sub usually needs to refer to objects/classes that have already been defined in the code (and thus should logically be positioned after the scope).

List

After some discussion it was decided that the current behaviour of returning Nil for List elements beyond the end is correct. And that returning a Failure for negative index values is the most consistent behaviour, especially in light of Array doing that as well. So the issue could be closed.
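For reference, a small sketch of the behaviour that was agreed on, assuming current Rakudo semantics (variable names are illustrative). A literal negative subscript such as [-1] is already rejected at compile time, so the index is kept in a variable here:

```raku
my $list = (1, 2, 3);
my $i    = -1;

say $list[5] === Nil;   # True: indexing beyond the end of a List gives Nil

my $r = $list[$i];      # a negative index gives a Failure
say $r ~~ Failure;      # True
say $r.defined;         # False (which also marks the Failure as handled)
```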

Consensus was that it would be nice to have all of these math / statistics methods, but that these should live in module land for the foreseeable future. So the issue could be closed.

It was recognized that there are indeed quite a few open issues in Rakudo (although far fewer than there have been in the past). However, there will always be open issues. And with the current size of the core team, the number of issues will not significantly reduce in the future (with the current rate of new issues coming in).

So it was decided to close the issue as "unresolvable".

.classify

It was decided that the issue has gone a bit stale, and thus to ask the OP (Original Poster) whether it should stay open, especially since it was suggested that .classify / .categorize may need an overhaul (at least internally).

die with newline for raising end-user errors?

After explaining the esoteric rule in Perl 5 with regard to die with a message with and without a trailing newline (a message ending in a newline suppresses the "at FILE line N." location that Perl otherwise appends), it was agreed that it would be nice to have such a feature. Especially since at least 2 of the attendees had been using a note $message; exit 1 sequence to achieve just that. The disadvantage of this is that such a sequence cannot be caught by a CATCH.

After some discussion, a :no-backtrace named argument to die() was suggested, and it was agreed that the issue could be closed once a Pull Request had been created for this. This has since been implemented in #6076, so the issue was closed.

The next meeting will be held on 7 March 2026 at 19:00 UTC (20:00 CET, 14:00 EST, 11:00 PST, 04:00 JST (8 Mar), 06:00 AEDT (8 Mar)), and again with a one-hour maximum. If not all of these issues have been resolved, they will be moved to a future meeting.

Since Jitsi has worked out so far, the next meeting will be held at the same URL: https://meet.jit.si/SpecificRosesEstablishAllegedly. Jitsi was selected because it has proven to work with minimal hassle, at least for the Raku Steering Council meetings: the only things you need to attend are a modern browser, a camera, and a microphone. No further installation is required.

A new set of issues has been selected by yours truly (in issue number order, oldest first):

rw parameter

Rat and fail on lossy coercion)

without allow chaining?

.raku should be replaced with a pluggable system

Any Raku Community member is welcome at these meetings. Do you consider yourself a Raku Community member? You're welcome. It's as simple as that.

But please make sure you have looked at the issues that will be discussed before attending the meeting. And if you already have any comments to make on these issues, add them to the issues beforehand.

The original contributors to these issues will also be notified (unless they muted themselves from these issues). We hope that they also will be able to attend.

If you consider yourself a Raku Community member, please try to attend! If anything, it will allow you to put faces to the names that you may be familiar with.

Hope to see you there!

This is part twelve in the "Cases of UPPER" series of blog posts, describing the Raku syntax elements that are completely in UPPERCASE.

This part will discuss the various aspects of containers in the Raku Programming Language.

This may come as a shock to some, but Raku really only knows about binding (:=) values. Assignment is syntactic sugar.

What?

Most of the scalar variables that you see in Raku are really objects that have an attribute to which the value is bound when you assign to the variable. These objects are called Scalar containers, or just "containers" for short.

In short: assignment in Raku is binding to an attribute in a Scalar object. Grokking how containers work in Raku (as opposed to many other programming languages) is one of the rites of passage to becoming an experienced and efficient developer in Raku. It sure was a rite of passage for yours truly!

In a simplified representation (almost pseudocode) one can think of these Scalar objects like this:

class Scalar {

has $!value;

method STORE($new-value) {

$!value := $new-value

}

method FETCH() {

$!value

}

}

And an assignment such as:

my $a;

$a = 42;

can be thought of as:

my $a := Scalar.new;

$a.STORE(42);

and showing the value of a variable (as in say $a), can be thought of as:

say $a.FETCH;
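The pseudocode above can be turned into a small runnable toy. To avoid clashing with the real Scalar class, the hypothetical class below is called ToyScalar; it only mimics the mental model (bind on STORE, return on FETCH) and is not how Rakudo actually implements containers:

```raku
class ToyScalar {
    has $!value;
    # STORE binds the new value to the attribute, as in the pseudocode
    method STORE(Mu \new-value) { $!value := new-value; self }
    # FETCH simply returns whatever is currently bound
    method FETCH() { $!value }
}

my $c := ToyScalar.new;   # roughly: my $a
$c.STORE(42);             # roughly: $a = 42
say $c.FETCH;             # 42 (roughly: say $a)
```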

In reality it's of course slightly more complicated, because there are such things as type constraints that can exist on a variable (such as my Int $a). And variables may have a default value (as in my $a is default(42)).

This type of information is kept in a so-called "container descriptor", which is considered to be an implementation detail (as in: don't depend on its functionality directly, the interface to it might change at any time).

Many shortcuts are taken in the implementation, in the interest of performance, because assignments are among the most basic operations in a programming language. And you want those to be fast!

As a mental model, this is very useful for understanding quite a few constructs in Raku.

In Raku any value returned at the end of a block (or with the return statement) is de-containerized by default. This means that something like this:

my $a = 42;

sub foo() { $a }

foo() = 666; # Cannot modify an immutable Int (42)

will not work. What if you could return the container from a block? Then you could just assign to it! There are indeed two easy ways to return something with the container intact: the is rw trait:

my $a = 42;

sub foo() is rw { $a }

foo() = 666;

say $a; # 666

Alternately, one can use the return-rw statement.

my $a = 42;

sub foo() { return-rw $a }

foo() = 666;

say $a; # 666

This feature is for instance used if you want to assign to an array element. In part 9 of this series it was shown that the AT-POS method is what is being called by postcircumfix [ ]. In the core, both the postcircumfix [ ] operator as well as the underlying AT-POS method have this trait set for performance (as the return-rw statement has slightly more overhead).
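The same mechanism is available to your own classes: an AT-POS method marked is rw hands back the element's container, so assignment through postcircumfix [ ] just works. A sketch with a hypothetical Ring class whose indices wrap around:

```raku
# Hypothetical ring: indices wrap around the number of items
class Ring does Positional {
    has @!items;
    submethod BUILD(:@items) { @!items = @items }
    method elems() { @!items.elems }
    # `is rw` returns the element's container, so callers can assign to it
    method AT-POS(Int:D $i) is rw { @!items[$i % @!items.elems] }
}

my $ring = Ring.new(items => <a b c>);
say $ring[4];     # b (4 % 3 == 1)
$ring[5] = 'Z';   # 5 % 3 == 2: assigns to the third item's container
say $ring[2];     # Z
```

Without the is rw trait, the assignment would be expected to fail, because AT-POS would then return a plain (immutable) value rather than a container.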

If an array is initialized with values, it will create containers for each element and put the right value in the right place. So not only can you fetch the values from there, you can also assign to these containers. And it is also possible to bind such a container to another variable.

Binding to containers allows for features in Raku that are considered "magic" by some, and maybe "too magic" by others. They are part of common idioms in the Raku Programming Language.

For instance: incrementing all values in an array:

my @a = 1,2,3,4,5;

$_++ for @a;

say @a; # [2 3 4 5 6]

The topic variable $_ binds to the elements of the array in a for loop. So in each iteration it actually represents the container at that location and can thus be incremented.

People coming from other languages may think that $_ is a "reference" (or "pointer") to the actual memory location of the element in the array. This notion is incorrect in Raku. The topic variable $_ is bound to the container of each element. The container object itself is completely agnostic as to where it lives. It's just a simple object that knows how to FETCH and STORE.
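Named loop parameters can be bound to the element containers too, but there it has to be asked for explicitly, since pointy-block parameters are read-only by default. A small sketch:

```raku
my @a = 1, 2, 3;

# `is rw` binds $x to each element's container, so the change sticks
for @a -> $x is rw { $x *= 10 }
say @a;   # [10 20 30]

# Without `is rw`, `$x *= 10` would die: the parameter would be read-only.
```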

Slices to arrays also return containers. For instance to increment only the first and last element in an array without knowing its size:

my @a = 1,2,3,4,5;

$_++ for @a[0, *-1];

say @a; # [2 2 3 4 6]

The slice [0, *-1] produces 2 containers (one for the first element (0) and one for the last (*-1)). Only these containers get incremented, thus giving the expected result.

The same logic applies to the values in hashes.

my %h = a => 42, b => 666;

$_++ for %h.values;

say %h; # {a => 43, b => 667}

But binding containers can also be done directly in your code. A contrived example:

my $a = 42;

my $b := $a;

$b = 666;

say $a; # 666

Note that by assigning to $b, you're assigning to the container that lives in $a, because binding $b to $a effectively aliased the container in $a.
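The container identity operator =:= makes this aliasing visible: it returns True only when both sides are the very same container.

```raku
my $a = 42;
my $b := $a;   # $b is bound to the same container as $a
my $c = $a;    # $c has its own container, holding a copy of the value

say $a =:= $b;   # True: same container
say $a =:= $c;   # False: different containers
```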

In Raku it is possible to assign to elements in an array that do not exist yet.

my @a;

@a[3] = 42;

say @a; # [(Any) (Any) (Any) 42]

So there is no container yet for the element at index 3. Yet it is possible to assign to it! How does that work?

The secret is really in the "container descriptor". So let's refine our pseudocode representation of the Scalar class:

class Scalar {

has $!descriptor;

has $!value;

method STORE($value) {

$!value := $!descriptor.process($value);

}

method FETCH() {

$!value

}

}

Note that storing a value has now become a little more complicated ($!descriptor.process($value) rather than just $value). So the descriptor object's process method takes the value, does what it needs to do with it, and then returns it so that it can be bound to the attribute.

This descriptor object is typically responsible for remembering the name of the variable and its type constraint, performing type checking, and keeping the default value of the container, plus any additional logic that is needed.
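To make the descriptor idea concrete, here is a runnable toy version. Both classes are hypothetical (ToyDescriptor and DescribedScalar are not Rakudo classes, and the real descriptors are implementation details); the sketch only illustrates the division of labour: the container binds, while the descriptor checks the type and supplies the default.

```raku
class ToyDescriptor {
    has Str $.name    is required;
    has Mu  $.of      = Mu;    # type constraint
    has Mu  $.default = Any;   # initial value, also used when Nil is assigned

    method process(Mu \value) {
        return $!default if value === Nil;   # Nil resets to the default
        die "Type check failed in assignment to $!name; expected {$!of.^name} but got {value.^name}"
            unless value ~~ $!of;
        value
    }
}

class DescribedScalar {
    has $.descriptor is required;
    has $!value;
    submethod TWEAK() { $!value := $!descriptor.default }
    method STORE(Mu \value) { $!value := $!descriptor.process(value); self }
    method FETCH() { $!value }
}

my $c = DescribedScalar.new(
    descriptor => ToyDescriptor.new(name => '$a', of => Int, default => 42),
);
say $c.FETCH;        # 42
$c.STORE(666);
say $c.FETCH;        # 666
$c.STORE(Nil);
say $c.FETCH;        # 42
try $c.STORE('foo');
say $!.message;      # Type check failed in assignment to $a; expected Int but got Str
```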

There are many different types of container descriptor classes in the Rakudo implementation, all with names starting with ContainerDescriptor::. And all are considered to be implementation details.

For instance, when an AT-POS method is called on a non-existing element in an array, a container with a special type of descriptor is created that "knows" to which Array it belongs, and at which index it should be stored. The same is true for the descriptor of the container returned by AT-KEY (as seen in part 10) which knows in which Hash it should store when assigned to, and what key should be used.

It's this special behaviour that allows arrays and hashes to really just DWIM.

These special descriptors of containers for arrays and hashes also introduce an action-at-a-distance feature that you may or may not like.

my @a;

my $b := @a[3];

say @a; # []

$b = 42;

say @a; # [(Any) (Any) (Any) 42]

Note that the element in the array was not initialized after the binding, but only after a value was assigned to $b. This behaviour was specifically implemented this way to prevent accidental auto-vivification. For example:

my %h;

say %h<a><b>:exists; # False

say %h; # {}

%h<a><b> = 42;

say %h; # {a => {b => 42}}

So even though %h<a> is considered to be an Associative because of the <b>, the :exists test will not create a Hash in %h<a>. Only after a value has been assigned do %h<a> and %h<a><b> actually spring to life.
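The same holds for the array example above: the :exists adverb shows that binding to @a[3] creates nothing in the array, and that only the assignment does. A quick check:

```raku
my @a;
my $b := @a[3];     # bound to a container that knows its array and index
say @a[3]:exists;   # False
say @a.elems;       # 0

$b = 42;            # only now is the element stored in @a
say @a[3]:exists;   # True
say @a.elems;       # 4
```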

This rather lengthy introduction / diversion was meant to make you aware of some of the underlying mechanics of containers, because Raku supplies a fully customizable class that allows you to create your own container logic: Proxy. Understanding what containers are about is helpful when working with that class.

Creation of such a container is quite easy: all you need to supply are a method for fetching the value, and a method for storing a value (very similar to the pseudocode representation at the start of this blog post). This is done with the named arguments FETCH and STORE. Creation of a Proxy object is usually done inside a subroutine for convenience. A contrived example:

sub answer is rw {
    my $value = 42;
    Proxy.new(
        FETCH => method () {
            $value
        },
        STORE => method ($new) {
            say "storing $new";
            $value = $new;
        }
    )
}

my $a := answer;

say $a; # 42

$a = 666; # storing 666

say $a; # 666

The careful reader will have noticed that the is rw trait needs to be specified on the subroutine, otherwise the Proxy would be de-containerized on return. And that the result of calling the answer subroutine was bound (:=) instead of assigned, because assignment would also cause de-containerization and thus completely defeat the purpose of this exercise.

Because the code in the supplied methods closes over the lexical variable $value, that variable stays alive until the Proxy object is destroyed. So it offers an easy way to actually store the value for this Proxy object.
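The closure trick also makes it possible to imitate, in plain Raku, the special array-element descriptor described earlier: a Proxy that knows its array and its index, and only writes to the array when assigned to. This is a sketch with a hypothetical sub name (lazy-element); it is not how Rakudo implements AT-POS.

```raku
# Hypothetical helper: a container that only creates the array element
# once something is assigned to it.
sub lazy-element(@array, Int:D $index) is rw {
    Proxy.new(
        # reading a non-existing element returns Any without creating it
        FETCH => method () { @array[$index] },
        STORE => method ($new) {
            @array[$index] = $new;   # only now does the element spring to life
        },
    )
}

my @a;
my $e := lazy-element(@a, 3);
say @a.elems;   # 0
$e = 42;
say @a;         # [(Any) (Any) (Any) 42]
say $e;         # 42
```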

Inside the supplied methods you are completely free to put whatever code you want. As an example of how that could work in a module, the Hash::MutableKeys distribution was created. Another case of BDD (Blog Driven Development)!

You may have observed that the Proxy object does not allow for a descriptor. It was not considered to be needed, as you have all the flexibility you could possibly want. If you want one in your Proxy objects, you can create one yourself.
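For instance, a Proxy can close over an object that plays the part of a descriptor. In this sketch the hypothetical Defaulting class remembers a default value and restores it when Nil is assigned, much like the descriptor of a variable declared with the is default trait:

```raku
# Hypothetical stand-in for a container descriptor: it knows the default
# value to fall back on when Nil is assigned.
class Defaulting {
    has Mu $.default is required;

    method process(Mu \value) {
        value === Nil ?? $!default !! value
    }
}

sub container-with-default(Defaulting:D $descriptor) is rw {
    my $value = $descriptor.default;
    Proxy.new(
        FETCH => method ()     { $value },
        STORE => method ($new) { $value = $descriptor.process($new) },
    )
}

my $level := container-with-default(Defaulting.new(default => 'info'));
$level = 'debug';
say $level;   # debug
$level = Nil; # like assigning Nil to a variable declared with is default('info')
say $level;   # info
```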

This concludes the twelfth episode of cases of UPPER language elements in the Raku Programming Language, the fifth episode discussing interface methods.

In this episode containers were described, as well as the special Proxy class with its named arguments STORE and FETCH. This Proxy class provides a fully customizable container implementation.

Stay tuned for the next episode!

This is part eleven in the "Cases of UPPER" series of blog posts, describing the Raku syntax elements that are completely in UPPERCASE.

This part will discuss the interface method that can be provided in a class to handle calls to methods that do not actually exist in this class (or its parents).

Method dispatch in the Raku Programming Language is quite complicated, especially in the case of multi-dispatch. But the first step is really to see if there is any (multi) method with the given name.

Internally this is handled by the find_method method on the meta-object of the class (which is typically called with the .^find_method syntax on the class).

So for example:

# an empty class

class A { }

dd A.^find_method("foobar"); # Mu

dd A.^find_method("gist"); # proto method gist (Mu $:: |) {*}

Note that an empty class A (which by default inherits from the Any class) does not provide a method "foobar" (indicated by the Mu type object). But it does provide a gist method (indicated by the proto method gist (Mu $:: |) {*} representation of a Callable) because that is inherited from the Any class.

It's this logic that is internally used by the dispatch logic to link a method name to an actual piece of code to be executed.

If the dispatch logic cannot find a method by the given name, then it will throw an X::Method::NotFound error. One could of course use a CATCH phaser to handle such cases (as seen in part 6 of this series).
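A sketch of that approach, catching the exception thrown for the missing method and inspecting its method attribute:

```raku
class A { }

my $missing;
{
    A.foobar;
    CATCH {
        # inside CATCH, $_ is the exception object
        when X::Method::NotFound {
            $missing = .method;   # the name of the method that was not found
        }
    }
}
say $missing;   # foobar
```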

But there's a better and easier way to handle method names that could not be found. That is, if you're interested in somehow making them less fatal.

If a class provides a FALLBACK method (either directly in its class, or by one of its base classes, or by ingestion of a role), then that method will be called whenever a method could not be found. The name of the method will be passed as the first argument, and all other arguments will be passed verbatim.

A contrived example in which a non-existing method returns the name of the method, but only if there were no arguments passed:

class B {
    method FALLBACK($name) { $name }
}

say B.foo; # foo

say B.bar(42); # Too many positionals passed; expected 2 arguments but got 3

So is there something special about the FALLBACK method? No, its only specialty is when it is being called. So you can make it a multi method:

class C {
    multi method FALLBACK($name) {
        $name
    }
    multi method FALLBACK($name, $value) {
        "$value.raku() passed to '$name'"
    }
}

say C.foo; # foo

say C.bar(42); # 42 passed to 'bar'

On the other hand if you don't care about any arguments at all and just want to return Nil, you can specify the nameless capture | as the signature:

class D {
    method FALLBACK(|) { Nil }
}

say D.foo; # Nil

say D.bar(42); # Nil

The Raku Programming Language allows hyphens in identifier names (usually referred to as "kebab case"). Many programmers coming from other programming languages are not really used to this: they are more comfortable with using underscores in identifiers ("snake case").

Modern Raku programs usually use kebab case in identifiers. So it's quite common for programmers to make the mistake of using an underscore where they should have been using a hyphen. If such an error is made when writing a program, it will be a runtime error. Which can be annoying. In such a case, a FALLBACK method can be a useful thing to have if it could correct such mistakes.

This could look something like this:

class E {
    method foo-bar() { "foobar" }

    method FALLBACK($name, |c) {
        my $corrected = $name.trans("_" => "-");
        if self.^find_method($corrected, :no_fallback) -> &code {
            code(self, |c)
        }
        else {
            X::Method::NotFound.new(
              :invocant(self), :method($name), :typename(self.^name)
            ).throw;
        }
    }
}

The "E" class has a method "foo-bar". And a method "FALLBACK" that takes the name of the method that was not found (and puts any additional arguments in the Capture "c").

Note that the "c" is just an idiom for the name of a capture. It could have any name, but "c" is nice and short. If you want to use a name that's more clear to you, then please do so. As long as you use the same name later on.

It then converts all occurrences of underscore to hyphen in the name and then tries to find a Callable for that name. If that is successful it will execute the code with any arguments that were given by flattening the |c capture. Otherwise it throws a "method not found" error.

The :no_fallback argument is needed to prevent the method lookup from producing the "FALLBACK" method if the method was not found. Otherwise the code would loop forever.

So now this code will work instead of causing an execution error.

say E.foo-bar; # foobar

say E.foo_bar; # foobar

To make this more generally usable this code could be put into a role and have that live as an installable module. But that would be extra work, wouldn't it?

Fortunately the writing of this blog post initiated the development of such a role (and associated distribution) called Method::Misspelt. How's that for BDD (Blog Driven Development)?

Note that the module introduces a few more features and optimizations, and keeps track of multiple classes ingesting the same role. See the internal documentation for more information.
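For comparison, a bare-bones version of the class E logic packaged as a role might look like the following. This is a sketch only: the role name UnderscoreFallback is hypothetical, and this is not the code or the API of Method::Misspelt.

```raku
# Hypothetical role: not the API of the Method::Misspelt distribution.
role UnderscoreFallback {
    method FALLBACK($name, |c) {
        my $corrected = $name.trans("_" => "-");
        if self.^find_method($corrected, :no_fallback) -> &code {
            code(self, |c)   # pass along any arguments given
        }
        else {
            X::Method::NotFound.new(
              :invocant(self), :method($name), :typename(self.^name)
            ).throw;
        }
    }
}

class F does UnderscoreFallback {
    method foo-bar()          { "foobar" }
    method greet-person($who) { "hello $who" }
}

say F.foo_bar;               # foobar
say F.greet_person("you");   # hello you
```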

This concludes the eleventh episode of cases of UPPER language elements in the Raku Programming Language, the fourth episode discussing interface methods.

In this episode the FALLBACK method was described, as well as some simple customizations. And a bonus module created for this blog post only.

Stay tuned for the next episode!

This is a Raku Extension for Visual Studio Code including a Language Server. Currently it provides:

Kudos to BScan for implementing this.


Elizabeth Mattijsen's (lizmat) series now boasts 10 episodes on WHY RAKU GETS SHOUTY SOMETIMES:

Who knew there was so much yelling in it?

Weekly Challenge #361 is available for your kicks.

This week, one of jubilatious1's one-liners is in the frame. This was formulated to answer this Unix & Linux Stack Exchange question: How to get infos about the route of tor to the internet and format the output?

One-liner (single-step substitution):

~$ raku -pe 's/ <-[:]>+ <( /{"." x (17 - $/.to)}/;' file

Sample input:

IPAddress: 185.220.101.8
Location: Brandenburg, BB, Germany
Hostname: berlin01.tor-exit.artikel10.org
ISP: Stiftung Erneuerbare Freiheit
TorExit: true
City: Brandenburg
Country: Germany
CountryCode: DE

Sample output:

IPAddress........: 185.220.101.8
Location.........: Brandenburg, BB, Germany
Hostname.........: berlin01.tor-exit.artikel10.org
ISP..............: Stiftung Erneuerbare Freiheit
TorExit..........: true
City.............: Brandenburg
Country..........: Germany
CountryCode......: DE

Here are the cool Raku features on show:

See https://docs.raku.org/language/regexes for more information.
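To see what the one-liner does outside of the -p loop, its substitution can be wrapped in a sub and applied to individual lines. A sketch (the sub name align-key is hypothetical); the width 17 is taken from the original one-liner:

```raku
# The one-liner's substitution, wrapped in a sub so it can be applied to one line.
sub align-key(Str $line is copy --> Str) {
    # <-[:]>+ matches the key (everything up to the first colon); <( then moves
    # the start of the match to the end of the key, so the match is zero-width
    # and $/.to is the length of the key: insert enough dots to reach 17
    $line ~~ s/ <-[:]>+ <( /{"." x (17 - $/.to)}/;
    $line
}

.say for ("IPAddress: 185.220.101.8", "ISP: Stiftung Erneuerbare Freiheit").map(&align-key);
```

This prints the same aligned keys as the corresponding lines of the sample output above.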

Your contribution is welcome, please make a gist and share via the #raku channel on IRC or Discord.

New in 2026.01:

Improvements:

Fixes:

RakuAST:

Extracted from the latest Draft Changelog.

Kudos to BScan for their Visual Studio Code Language Server work. Please let me know if you would like to see it on the https://raku.org/tools page (even better raise a PR on https://github.com/Raku/raku.org).

Please keep staying safe and healthy, and keep up the good work! Even after week 55 of hopefully only 209.

~librasteve

We needed a legal document with numbered Articles, and the document needed to be in Markdown for GitHub issues, and in HTML, so naturally the source should be in RakuDoc.

Although numbered headings and items were specified in RakuDoc v1 (aka POD6), they were never implemented. In RakuDoc v2 there was progress, and together with some tweaking of the numitem templates, it was easy to write the RakuDoc source.

However, when we were revising RakuDoc, Damian Conway had some extra ideas about generalising enumeration, including ideas about adding alias definitions so that the numbered block could be referenced later in the text.

Since it had been so easy to tweak the numitem templates, I thought it would be easy to upgrade the specification of RakuDoc v2 to get these generalisations. Was I ever so wrong!!!! Ask a genius with decades of language design experience for a nice design and you get an effusion of ideas and extensions that make RakuDoc better than any editor I have ever used, but with a simplicity that makes it easy for a document author to understand.

Another result of the redesign is to make the underlying specification of RakuDoc much clearer, something I will cover later.

During the development process, my daughter mentioned that her friend had just finished a PhD dissertation and was complaining about how much time she needed to spend reformatting the text because the numbering kept getting out of sync. The problem with most editors is that most of the effort goes into perfecting the user-facing interface, while the underlying format is created ad hoc, and enumeration is added as an afterthought. Getting the underlying structure right will make subsequent rendering easier.

Just as the design was ending and the renderer passing most tests, I casually mentioned citations would be a good extension. The "standards" for citations number in the thousands! .oO(The Jabberwocky) But Damian snicker-snacked his vorpal blade, reducing the monstrous tangle to something easier to use. RakuDoc v2 now has a =citation block and Q<> markup to insert quoted citations. I will cover these additions in the next blog post (as in: when I get the renderer to work with the new ideas).

Back to enumerating RakuDoc, here are some examples to illustrate the new functionality.

Suppose you have a formula (or code or map or table or item in a list) and you want to number it, and then reference the number in the text? If another formula is then added earlier in the text, you would want the references to update as well.

Suppose you have tables that you want to be enumerated separately from headings? But also, by preference, you want them to be enumerated in sequence with headings? That is, the prefix to the table enumeration is the heading number.

Suppose you want Chinese, Roman, or Bengali numbering?

AND suppose you also want the numbering to be a mix of numerals and other characters, such as brackets? This is a common requirement in legal documents.

Suppose you want arbitrary words before, after, or between the numbers? For example, Article 1., Article 2., etc.

Suppose you want to have the paragraphs numbered? Sometimes you might want the paragraphs numbered in sequence from the start of the document, and sometimes you want the numbering to restart after a new heading.

Suppose you want to have the Tables and the Formulae numbered in sequence? Or perhaps, for a section that explains some aspect of one formula, you want to number the formulae separately, but then return to the original sequence after that section?

The updated RakuDoc v2 allows for all of these possibilities, while also providing for sensible default option values.
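Underneath, rendering a counter in another numeral system is a mapping from an integer to a string. A minimal sketch of two such mappings, Roman numerals and Bengali digits; the sub names are hypothetical and this is not the renderer's code:

```raku
# Hypothetical helpers, not the renderer's code.

# Roman numerals: repeatedly take the largest value that still fits.
sub roman(Int:D $n where * > 0 --> Str) {
    my @table = 1000 => 'M', 900 => 'CM', 500 => 'D', 400 => 'CD',
                 100 => 'C',  90 => 'XC',  50 => 'L',  40 => 'XL',
                  10 => 'X',   9 => 'IX',   5 => 'V',   4 => 'IV',
                   1 => 'I';
    my $rest = $n;
    my $out  = '';
    for @table -> $pair {
        while $rest >= $pair.key {
            $out  ~= $pair.value;
            $rest -= $pair.key;
        }
    }
    $out
}

# Bengali digits run from U+09E6 to U+09EF, one per ASCII digit 0 to 9.
sub bengali(Int:D $n where * >= 0 --> Str) {
    $n.Str.comb.map({ (0x09E6 + .Int).chr }).join
}

say roman(2026);     # MMXXVI
say bengali(2026);   # ২০২৬
```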

Moreover, RakuDoc allows for custom blocks, e.g., LeafletMap, a map block that exposes the marvelous Leaflet library. It is now an automatic part of the specification that prefixing a custom block with num (numLeafletMap) will number the caption of the block.

An HTML rendering of the new RakuDoc v2 specification containing the enumerated functionality can be found on the RakuDoc enumeration branch.

In the specification of RakuDoc, there are discussions about directives, blocks, metaoptions, and a section on =config.

Since the syntax for a directive is almost the same as the syntax of a block, it is not immediately apparent what the difference is. As we developed the generic enumeration, it became much clearer what the difference is.

Furthermore, the older specification covered multi-level headings and items. This functionality is now extended to all blocks, so =numtable2 or =numcode3 will be numbered in parts from the previous instance of =table (the equivalent of table1) or =code. This means that we need to carefully distinguish between the block base (e.g., table or code), and the block level (e.g., 1, 2, 3). Together the base and the level create a blocktype. These distinctions, although implied, were not so clear in the older specification.
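Multi-level numbering can be pictured as one count per level, where stepping a level increments its count and forgets all deeper levels. A sketch under that assumption; the LevelCounter class is hypothetical, and counting a skipped parent level as 1 is an assumption made for this sketch, not wording from the specification:

```raku
# Hypothetical multi-level counter: one count per level, level 1 outermost.
class LevelCounter {
    has Int @!counts;

    method step(Int:D $level where * >= 1 --> Str) {
        # a level used before its parents implies the parents (count them as 1)
        @!counts[$_] ||= 1 for ^($level - 1);
        @!counts[$level - 1]++;
        @!counts.splice($level);          # forget any deeper levels
        @!counts[^$level].join('.')
    }
}

my $tables = LevelCounter.new;
say $tables.step(1);   # 1
say $tables.step(2);   # 1.1
say $tables.step(2);   # 1.2
say $tables.step(1);   # 2
say $tables.step(3);   # 2.1.1
```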

Let's return to the =config directive. The first parameter of =config is the name of a blocktype. Note that the num prefix is not a part of the blocktype and only indicates that the enumeration associated with the instance needs to be rendered. Consequently, the presence or absence of 'num' in the config parameter has no significance. The next =config parameters are all, and may only be, metadata options.

The function of the =config directive is to distribute the named metadata options, and their values, to the named blocktype. In addition, the same metadata option can be specified on a blocktype instance (using either the =for or =begin directives), in which case it takes precedence over the option in the config. A consequence of this paradigm is that each metadata option has to have a semantic significance in the context of a blocktype instance.

One of the design aims was to give a document author the choice of restarting the enumeration for some blocktype when another blocktype is encountered. For example, the original specification for =numitem included the idea that whenever a sequence of =numitems was encountered, they would form an ordered set, and that if another block, such as a paragraph, was encountered, the enumeration would be restarted.

This means that the occurrence of a =head restarts the item counter. This cannot be controlled within the handler of a block. Consequently, any option affecting block counting needs to be distinct from the rendering of the blocks themselves.

After some design iterations, a new directive called =counter was introduced. A directive, as opposed to a block, affects all subsequent blocks in the RakuDoc source, within the same scope. A block, and the metadata options operating on the blocktype, only affects its immediate contents.

Further, it became clear that a block and its counter were different objects, although for simplicity they have the same name. For most needs, an author does not need to know that a counter and a block are different, except that when a new counter is needed, the difference becomes necessary. In a similar way, for many purposes it doesn't matter whether a number is an integer or a string, except when it does. Raku allows for things to become complicated when the author needs it to be.

To make it easier to experiment with RakuDoc and the enumerated functionality, I have developed a Docker image called browser-editor. It is based on an Alpine image and contains raku, Cro, and the latest version of Rakuast::RakuDoc::Render (currently not yet in the fez system).

Using podman, the image can be put into a container and run locally on Linux-based systems, thus:

podman pull docker.io/finanalyst/browser-editor:latest

podman run -d -v .:/browser/publication --rm --name rb docker.io/finanalyst/browser-editor:latest

For Apple silicon Macs, a small change is needed to indicate that the platform inside the Docker image is Linux (linux/amd64), thus:

podman run -d -v .:/browser/publication --rm --platform linux/amd64 --name rb docker.io/finanalyst/browser-editor:latest

In both cases the container is given the arbitrary name rb, and so when it is time to stop the container, the following is sufficient:

podman stop rb

The directory that the container is started from will then be linked to the container's /browser/publication directory, and changes to RakuDoc source files, as well as new ones, will be saved in that directory.

A RakuDoc source can then be edited and the HTML rendering is created on the fly, by pointing a browser at (setting the browser URL to) localhost:3000.

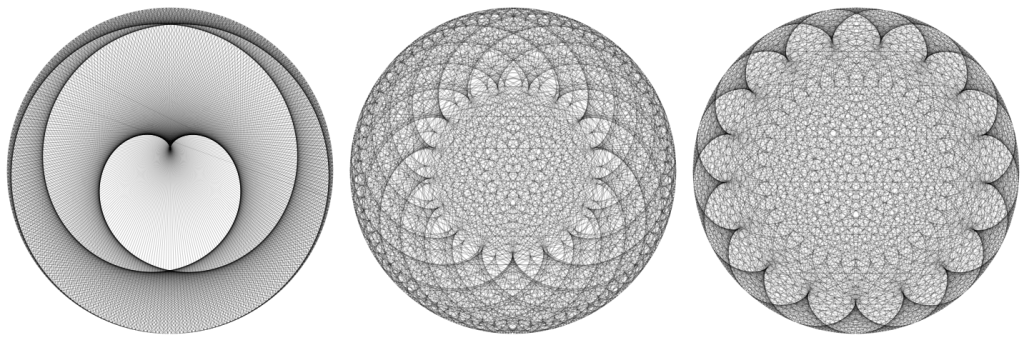

You should see something like the following in the browser:

Two frameworks exist: one that needs no internet connection (a minimal single-file rendering), and another that uses plugins to expose some useful third-party libraries and the more sophisticated Bulma CSS framework. The choice is toggled using the 'online' button.

Try selecting 'online' and copying in the following test source by Damian Conway to see some different effects.

=begin rakudoc

=TITLE Taster source

=numitem One

=numitem Two

=counter item :restart(42)

=numitem Three

=counter item :restart

=numitem Four

=counter item :prefix<head3>

=numitem Five

=counter item :!restart

=para ???

=numitem Six

=numpara

S<Now is the winter of our "no content"

Made glorious summer by Camelia’s bloom;

And all backlog that lour’d upon our docs

In deep commits is buried, link’d, and tagged.

Our nightly builds now wear triumphant green,

Our failing tests to passing smiles are turn’d;

Grim sighs of “ere next Yule” have chang’d to grins,

And hacker dread to blog posts boldly strung.>

=numpara

S<But I—long nurs’d on RFCs and hope,

Deform’d by specs that shifted as I read,

Unfit for idle scripts or stable sleep—

Am set, since long delays are now no more,

To ship Raku...and break the world anew.>

=numcode

$x = any <1 2 3>;

=for numcode :caption<Same thing, just labelled>

$x = any <1 2 3>;

=for numformula :caption<This means nothing!>

x^2 = y_j + \sum z_i

=for numformula :caption<This means nothing!> :alt< xH<2> = yJ<1> + E<GREEK CAPITAL LETTER SIGMA> zJ<i> >

x^2 = y_j + \sum z_i

=for numformula :alt< (1+x)H<n> = E<GREEK CAPITAL LETTER SIGMA> H<n>CJ<i> xH<i> > :caption<This is actually right>

(1+x)^n = \sum_{i=0}^n {n \choose i} x^i

=for numinput

Hey type something:

=numoutput

You typed: hsdkhldkhskdhasd

=counter numtable :restart(33456)

=begin numtable :caption<Encryption table> :form<Example %R: %C:pc>

=row

=cell A

=cell B

=cell C

=row

=cell D

=cell E

=cell F

=end numtable

=end rakudoc

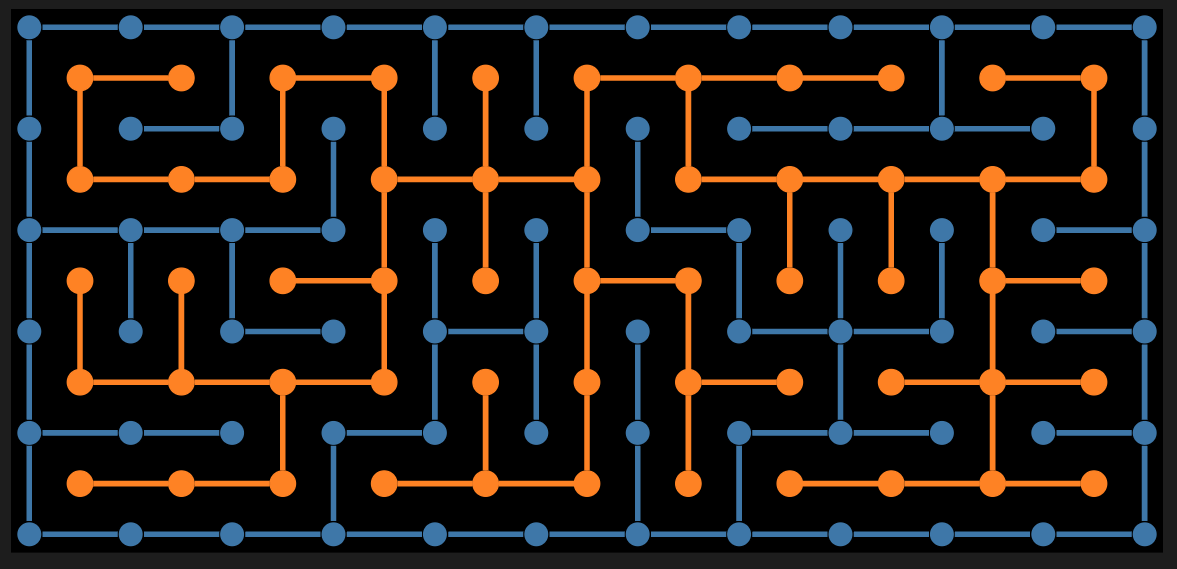

This should look like the following (or at least the top part).

The example shows some features like numbering paragraphs, code examples and tables. But you will also see the difference between the two rendering contexts. The online version has access to an online LaTeX rendering resource, so the formulae are nicely rendered, whilst the off-line version only renders the raw formula or a character-based alternative, if one is provided.

Suppose we want to enumerate a code sample and then refer to it later. We can do the following:

=begin rakudoc :!toc

=TITLE Enumerations

=for numcode :numalias<SAY_EX> :lang<raku>

my %h = <one two three> Z=> ^3;

say %h;

=numoutput {one => 0, three => 2, two => 1}

=for numcode :numalias<PUT_EX> :lang<raku>

my %h = <one two three> Z=> ^3;

put %h;

=for numoutput

one 0

three 2

two 1

The difference between A<SAY_EX> and A<PUT_EX> is that C<say> and C<put> use different methods to convert the C<%h> structure into printable strings.

=end rakudoc

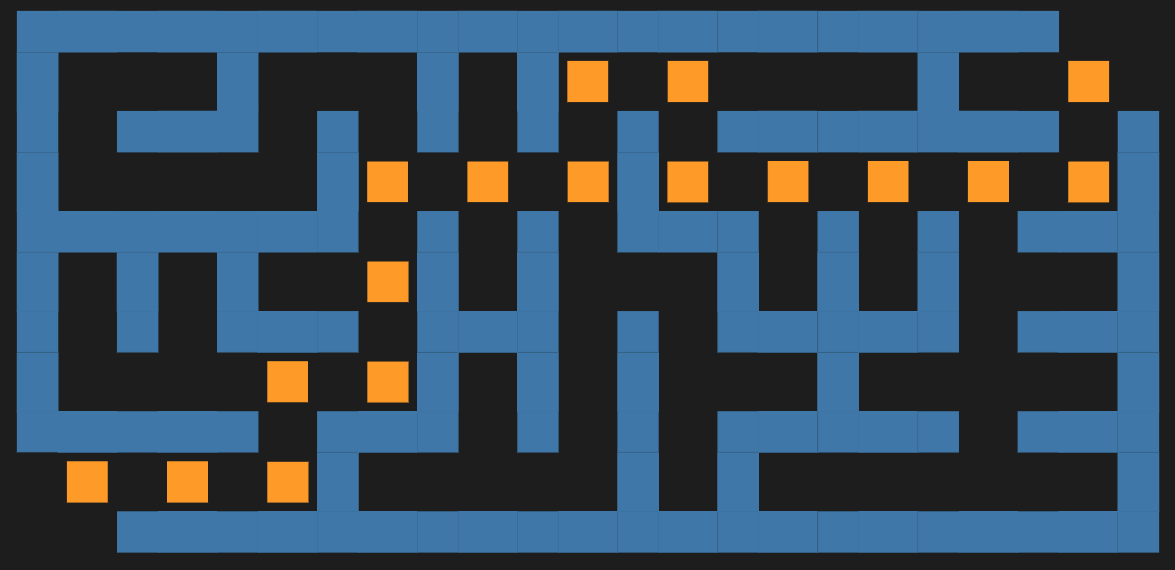

In the browser-editor image, we will get an HTML rendering something like:

The =begin rakudoc :!toc and =end rakudoc lines are used to top and tail the RakuDoc example above because the HTML renderer expects a complete Raku program, and a RakuDoc source (currently) needs the RakuDoc to be within a =rakudoc block. The :!toc switches off the automatic Table of Contents that the HTML utility of the Rakuast::RakuDoc::Render distribution generates. (Try removing :!toc.)

Suppose we want our tables to have enumerations that align with the enumerations of the headings. This means we are requiring a different sort of behaviour from the counter associated with the table block base. Consequently, we need to use the =counter directive. Also note that we are prefixing with the head2 block, which has an implicit prefix of head1.

=begin rakudoc :!toc

=TITLE Prefixes

=counter table :prefix<head>

=numhead First title

=numhead2 Sub first title

=for numtable :caption<First table>

| one | two |

=for numtable :caption<Second table>

| three | four |

=numhead Second Title

=counter table :restart(6)

=for numtable :caption<Third table>

| five | six | seven | eight |

=for numtable2 :caption<Subordinate table>

| nine | ten |

| nine | ten |

=end rakudoc

Comments:

The =counter table :restart(6) directive sets the table counter to 6 before Third table.

To illustrate the multilingual ability of RakuDoc (and Raku in general), let's use Chinese, Roman and Bengali numerals for multilevel headings.

=begin rakudoc :!toc

=TITLE Some non-Arabic numerals

=config head :form< %Z %D >

=config head2 :form< 「%Z」%R %D >

=config head3 :form< 「%Z」%R「%B」 %D >

=numhead First title

=numhead Second title

=numhead3 Sub-sub-head one (implies the sub-head)

=numhead3 Sub-sub-head two

=numhead2 Sub-head first explicit

=numhead2 Sub-head second explicit

=for numformula2 :form<%T %Z.%R. >

\begin{align*}

\sum_{i=1}^{k+1} i^{3}

&= \biggl(\sum_{i=1}^{k} i^{3}\biggr) + (k+1)^3\\

&= \frac{k^{2}(k+1)^{2}}{4} + (k+1)^3 \\

\end{align*}

=end rakudoc

This will produce an HTML rendering a bit like

The :form option is very flexible, and in fact the brackets in the previous example are arbitrary text. The following RakuDoc source

=begin rakudoc :!toc

=TITLE Arbitrary text in enumeration

=config head :form< Article %N. %D >

=config head2 :form< Article %N.%r. %D >

=config head3 :form< Article %N.%r「%Z」 %D >

=numhead First title

=numhead Second title

=numhead3 Sub-sub-head one (implies the sub-head)

=numhead3 Sub-sub-head two

=numhead2 Sub-head first explicit

=numhead2 Sub-head second explicit

=end rakudoc

will produce something like:

In RakuDoc, as in all text editors, paragraphs are strings of text ending in a blank line or (in RakuDoc) a new block. There is no need to mark a paragraph block, but it will be equivalent to a block marked =para.

By default, each paragraph is numbered from the start of the document. In order to see the enumeration, the paragraph does need to be started with a =numpara.

For this example, we want to number paragraphs in each section marked with a heading. So we need to tell the counter which conditions it is restarted by; in this case after every =head (by default a bare block name has a level of 1). There are both :restart-after and :restart-except-after conditions, but that's all we'll say about them here.
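The effect of :restart-after<head> can be simulated by walking through the blocks in document order: a level-1 =head resets the paragraph count, while any other block, including a =head2, leaves it alone. A simplified sketch, using a hypothetical list of (block type => text) pairs that mirrors the RakuDoc source below; it is not how the renderer is implemented:

```raku
# Hypothetical, simplified document: block type => text, in document order.
my @blocks =
    head  => 'First heading',
    para  => 'First line',
    para  => 'Second line',
    para  => 'Third line',
    head  => 'Second heading',
    para  => 'First line 2',
    para  => 'Second line 2',
    head2 => 'Subordinate heading does not restart the paragraphs',
    para  => 'Third line 2',
    para  => 'Fourth para';

my $para-count = 0;
my @rendered;
for @blocks -> $block {
    given $block.key {
        when 'head' { $para-count = 0; @rendered.push: $block.value }   # restart-after<head>
        when 'para' { @rendered.push: "{++$para-count}. {$block.value}" }
        default     { @rendered.push: $block.value }                    # head2 keeps the count
    }
}
.say for @rendered;
```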

The RakuDoc source is

=begin rakudoc :!toc

=TITLE Restarting after headings

=counter para :restart-after<head>

=head First heading

=numpara First line

=numpara Second line

=numpara Third line

=head Second heading

=numpara First line 2

=numpara Second line 2

=head2 Subordinate heading does not restart the paragraphs

=numpara Third line 2

=numpara Fourth para

=end rakudoc

The source will yield something like:

We want to number code and formulae using the same counter, but also we want to explain some formula with a special numbering that is then forgotten.

Since all =config and =counter statements are scoped, all we need to do to get the special numbering is to create a =section, which introduces a new block scope. The previous config/counter options continue, unless explicitly overridden.

However, it would not be useful if, upon exiting a section, the numbering of a counter reverted to the value before the section. So there is a subtle distinction between the counter and the value of the count it contains. The counter options, such as its prefix and when it is restarted, are scoped, but the value of the counter is not. In order to reset the counter value, the counter has to be restarted explicitly (for example with =counter and :restart).

RakuDoc v2 clarified the idea of custom blocks. Previously it was implied that developers could have their own blocks, but v2 clarified the difference between built-in and custom blocks: custom block names must contain a mix of upper- and lowercase letters (more precisely, characters with the Unicode properties Lu and Ll).

The enumeration options use this idea by creating custom counters. Since this is something that is managed by a block, the :counter option is provided to the appropriate blocks using a =config directive.

=begin rakudoc :!toc

=TITLE Using custom counters

=config formula :counter< FormulaeTables >

=config table :counter< FormulaeTables >

=numhead First title

=numtable

| one | two | three |

=numtable

| more | tabular data |

| 5 | 6 |

| 7 | 8 |

=numformula E = mc^2

=numformula e^{\pi i} = -1

=begin section

=config table :counter< Derivation > :form<Aside: %T %N>

=numhead2 Some explanation

=numtable

| this | data | is | more | detailed |

| 1 | 2 | 3 | 4 | 5 |

=end section

=numhead Continuing

=numtable

| here | we | resume |

=end rakudoc

This will produce something like

We have only just completed the revised specification, and the Renderer has only just been developed. It is inevitable that there will be flaws, and the styling choices may not be liked by everyone.

Even so, we think this new upgrade of RakuDoc v2 will prove to have a much wider applicability than just a documentation aid to Raku programs.

The docker image allows anyone to experiment with the new RakuDoc functionality immediately without needing to install the whole Raku / Cro / Rakuast::RakuDoc::Render stack and some dependencies, such as Dart Sass, locally.

Dsci is a brand-new kid on the CI/CD block. It allows the use of general-purpose programming languages to create CI scenarios.

——-

Consider an imaginary case of building and deploying an application as a Docker container. Logically, we have two main stages:

build a Docker image from source code

deploy the Docker image using the docker pull and docker run commands

Let’s see how dsci could tackle such a scenario …

——-

The main concept we start with is the so-called ‘pipeline file’.

It’s just a YAML file containing a list of jobs to be performed. Every job is executed in an isolated Docker container, and execution is always sequential: one job after another.

This pattern, while simple, covers the majority of use cases:

jobs.yaml

jobs:
  -
    id: build
    path: build/
  -
    id: deploy
    path: deploy/

Thus, every element of the job list is a job, which has a unique identifier and a path to the location of its job file.

Usually there are conditions that determine when and which CI scripts are triggered. Those conditions may be based on different criteria, but usually they depend on branch names.

Dsci utilizes a special syntax to define those rules. Let’s say we only want to deploy if the source branch is main, and run the build for all branches:

jobs:
  -
    id: build
    path: build/
  -
    id: deploy
    path: deploy/
    only: .<ref> eq "refs/heads/main"

Read more about job conditions in the dsci documentation: https://github.com/melezhik/DSCI/blob/main/job-cond.md
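The condition reads like a Raku expression evaluated with the topic $_ set to some event data. Assuming that (the %event hash, its ref key and the sub name wants-deploy are illustrative assumptions; check the dsci documentation for what is really available), the rule behaves like this sketch:

```raku
# Illustrative assumption: the condition sees the event data as a hash in $_.
sub wants-deploy(%event --> Bool) {
    given %event {
        .<ref> eq "refs/heads/main"   # the expression from the only: key
    }
}

for 'refs/heads/main', 'refs/heads/feature-x' -> $ref {
    say "$ref: ", wants-deploy(%(ref => $ref)) ?? 'build + deploy' !! 'build only';
}
```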

To pass parameters to a job, just use the params: key:

jobs:
  -
    id: build
    path: build/
    params:
      foo: bar
      version: 0.1.0
  -
    id: deploy
    path: deploy/

These parameters can be thought of as overrides for default ones; however, dsci allows more than that: it can pass results (states) from one job to another, as shown later.
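Treating params as overrides can be pictured as a key-by-key merge in which the job's params win. A sketch in Raku, assuming such simple merging (dsci's actual precedence rules are not spelled out here, and the registry key is made up):

```raku
# Illustrative values; "registry" is a made-up default.
my %defaults = version => '0.0.1', foo => 'baz', registry => 'repo';
my %params   = foo => 'bar', version => '0.1.0';

# On duplicate keys the later pair wins, so %params overrides %defaults.
my %config = |%defaults, |%params;
say %config;   # {foo => bar, registry => repo, version => 0.1.0}
```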

The next important building block of the hierarchy is the so-called “job file”, which defines the main job logic.

The job file needs to be named according to the language of choice. In our example we use Bash, as it is usually enough for many use cases, but other languages are also possible: if one needs more flexibility, one may choose Python or Golang.

build/task.bash

version=$(config version)
tag_version=$(date +%s).$version

# tag the image with the same name it is pushed under
docker build . -t "repo/app:$tag_version"
docker push "repo/app:$tag_version"

In this simple example our job file is just a single-task job. However, if there is a need to split a complex job into several tasks, this can be done with the job.$ext file approach. This file needs to be named according to the language of choice.

Let’s say we have three tasks (configure, build and test) incorporated into the build job; we may run them one by one like this:

build/job.py

#!/bin/python

# run_task() is provided by the dsci SDK; tasks run in the order given
run_task("configure")
run_task("build")
run_task("test")

And if we put those tasks under the build/tasks/ directory like this:

build/tasks/configure/task.bash
build/tasks/build/task.bash
build/tasks/test/task.bash

then we have a modular setup of our CI job.

The neat thing about this DSL is that dsci provides the same SDK for all supported programming languages.

Read more about jobs and tasks on the dsci documentation web site:

Let’s make our last job example more realistic and pass the Docker image tag dynamically created by the build job on to the deploy job, so that the deploy job knows which tag to pull before deploying. All we need is to add an extra line that saves the job state into the internal dsci cache:

build/task.bash

version=$(config version)
tag_version=$(date +%s).$version
docker build . -t repo/app:$tag_version
docker push repo/app:$tag_version
update_state tag $tag_version          # save the tag so that later jobs can read it

update_state() is a very handy function that allows passing states between different tasks and jobs. It is implemented for all supported languages.

To pick up the tag name in the deploy job we can use the already mentioned config() function:

deploy/task.bash

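set -e
tag=$(config tag)                      # the tag saved by the build job via update_state
docker pull repo/app:$tag
docker stop -t 1 container || :        # ignore the error if no container is running yet
docker run --rm --name container -td repo/app:$tag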

Read more about job and task states in the dsci documentation (see the links above).

Let’s now extend the build job example, which pushes the image to a Docker registry. To do a push we need to authenticate against the registry first, and dsci provides a simple way to pass secrets to pipelines.

This time let’s rewrite our job file in Python for convenience:

build/job.py

#!/bin/python

import os

password = os.environ['password']      # the secret arrives as an environment variable
run_task("build", { "password": password })

And then modify the build task to handle the password parameter:

version=$(config version)
tag_version=$(date +%s).$version
docker build . -t repo/app:$tag_version
update_state tag $tag_version
echo "$password" | docker login --username your-username --password-stdin
docker push repo/app:$tag_version

As we can see, secrets are passed into pipelines as environment variables.

And unlike other task and job parameters, they are never saved in job reports or cache files.

—-

By default, dsci pipelines run inside a Docker container. This fits situations where one needs to run pure CI code, for example to build and run some unit tests. The CD part comes into play when build artifacts are ready for deployment.

Dsci allows switching to the deployment environment by using the localhost switch:

jobs:
  - id: build
    path: build/
    params:
      foo: bar
      version: 0.1.0
  - id: deploy
    path: deploy/
    localhost: true

In that case, deployment occurs on the VM running the dsci orchestrator, which allows restarting the Docker container with the new image version right on that VM.

——-

That is it.

This simple but real-life example shows how easily one can write CI/CD pipelines using the dsci framework, and how flexible it is.

Hopefully you like it.

—-

For comprehensive documentation and more information, please visit the dsci web site: http://deadsimpleci.sparrowhub.io/doc/README

This is part ten in the "Cases of UPPER" series of blog posts, describing the Raku syntax elements that are completely in UPPERCASE.

This part will discuss the interface methods that one can implement to provide a custom Associative interface in the Raku Programming Language. Associative access is indicated by the postcircumfix { } operator (aka the "hash indexing operator").

In a way this blog post is a cat license ("a dog licence with the word 'dog' crossed out and 'cat' written in crayon") from the previous post. But there are some subtle differences between the Positional and the Associative roles, so just changing all occurrences of "POS" into "KEY" will not cut it.

The Associative role is really just a marker, just as the Positional role. It does not enforce any methods to be provided by the consuming class. So why use it? Because it is that constraint that is being checked for any variable with a % sigil. An example:

class Foo { }

my %h := Foo;

will show an error:

Type check failed in binding; expected Associative but got Foo (Foo)

However, if we make that class ingest the Associative marker role, it works:

class Foo does Associative { }

my %h := Foo;

say %h<bar>; # (Any)

and it is even possible to call the postcircumfix { } operator on it! Although it doesn't return anything really useful.

Note that the binding operator := was used: otherwise it would be interpreted as initializing a hash %h with a single value, which would complain with an "Odd number of elements found where hash initializer expected: Only saw: type object 'Foo'" execution error.
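Since Associative is only a marker, a quick way to see which types would pass the check made for a % sigil is to smartmatch them against the role. In this small sketch, Foo mirrors the class above and Bar is a hypothetical class without the role:

```raku
class Foo does Associative { }   # marker role only, no methods added
class Bar { }                    # no Associative role at all

say Foo ~~ Associative;          # True
say Bar ~~ Associative;          # False
say Hash ~~ Associative;         # True
say Map ~~ Associative;          # True
say Array ~~ Associative;        # False

my %h := Foo;                    # allowed, because Foo does Associative
say %h.^name;                    # Foo
```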

The postcircumfix { } operator performs all of the work of slicing and dicing objects that perform the Associative role, and handles all of the adverbs: :exists, :delete, :p, :kv, :k, and :v. But it is completely agnostic about how this is actually done, because all it does is call the interface methods that are (implicitly) provided by the object, just as with the Positional role. Only the names of the interface methods are different. For instance:

say %h<bar>;

is actually doing:

say %h.AT-KEY("bar");

under the hood. So these interface methods are the ones that actually know how to work on a Hash, Map or a PseudoStash, or any other class that does the Associative role.
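The adverbs listed above can be tried out on an ordinary Hash, where all of the interface methods are already provided. A small sketch:

```raku
my %h = a => 42, b => 666;

say %h<a>:exists;    # True
say %h<a>:p;         # a => 42
say %h<a>:kv;        # (a 42)
say %h<a>:k;         # a
say %h<a>:v;         # 42
say %h<a b>:v;       # (42 666), a slice of two keys
say %h<a>:delete;    # 42, the removed value
say %h<a>:exists;    # False
```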

Let's introduce the cast of this show (the interface methods associated with the Associative role):

The AT-KEY method is the most important method of the interface methods of the Associative role: it is expected to take the argument as a key, and return the value associated with that key.

Note that the key does not need to be a string, it could be any object. It's just that the implementation of Hash and Map will coerce the key to a string.

The AT-KEY method should return a container if that is appropriate, which is usually the case. This means you should probably specify is raw on the AT-KEY method if you're implementing it yourself.

say %h<bar>; # same as %h.AT-KEY("bar")
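To see why the container matters, compare two minimal classes that delegate to a private Hash. WithRaw and WithoutRaw are hypothetical names. Without is raw, the method returns only the value, so a plain assignment has no container to assign into. This sketch relies on the default ASSIGN-KEY falling back to AT-KEY, as described for ASSIGN-KEY further on.

```raku
class WithRaw does Associative {
    has %!store;
    method AT-KEY($key) is raw { %!store.AT-KEY($key) }   # passes the container through
}
class WithoutRaw does Associative {
    has %!store;
    method AT-KEY($key) { %!store.AT-KEY($key) }          # container is stripped on return
}

my %good := WithRaw.new;
%good<answer> = 42;
say %good<answer>;                            # 42

my %bad := WithoutRaw.new;
my $ok = try { %bad<answer> = 42; True };     # assigning to a bare value throws
say $ok ?? "assigned" !! "cannot assign";     # cannot assign
```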

The EXISTS-KEY method is expected to take the argument as a key, and return a Bool value indicating whether that key is considered to be existing or not. This is what is being called when the :exists adverb is specified.

say %h<bar>:exists; # same as %h.EXISTS-KEY("bar")

The DELETE-KEY method is supposed to act very much like the AT-KEY method. But it is also expected to remove the key so that the EXISTS-KEY method will return False for that key in the future. This is what is being called when the :delete adverb is specified.

say %h<bar>:delete; # same as %h.DELETE-KEY("bar")
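One way to watch this mapping at work is a class that records which interface method gets called. In this sketch, Logged is a hypothetical class that delegates to a private Hash. The exact call sequence for the assignment may depend on the Rakudo version, so the final log is printed without a claimed output.

```raku
class Logged does Associative {
    has %!store;     # the actual storage
    has @.calls;     # names of the interface methods called, in order

    method AT-KEY($key) is raw {
        @!calls.push: "AT-KEY";
        %!store.AT-KEY($key)
    }
    method EXISTS-KEY($key) {
        @!calls.push: "EXISTS-KEY";
        %!store.EXISTS-KEY($key)
    }
    method DELETE-KEY($key) {
        @!calls.push: "DELETE-KEY";
        %!store.DELETE-KEY($key)
    }
}

my %h := Logged.new;
%h<bar> = 42;          # no ASSIGN-KEY here, so the default goes through AT-KEY
say %h<bar>;           # 42
say %h<bar>:exists;    # True
%h<bar>:delete;
say %h<bar>:exists;    # False
say %h.calls;
```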

The ASSIGN-KEY method is a convenience method that may be called when assigning (=) a value to a key. It takes 2 arguments: the key and the value, and is expected to return the value. It functionally defaults to object.AT-KEY(key) = value. A typical reason for implementing this method is performance.

say %h<bar> = 42; # same as %h.ASSIGN-KEY("bar", 42)

The BIND-KEY method is a method that will be called when binding (:=) a value to a key. It takes 2 arguments: the key and the value, and is expected to return the value.

say %h<bar> := 42; # same as %h.BIND-KEY("bar", 42)

The STORE method accepts the values (as an Iterable) with which to (re-)initialize the hash, and returns the invocant.

The :INITIALIZE named argument will be passed with a True value if this is the first time the values are to be set. This is important if your data structure is supposed to be immutable: if that argument is False or not specified, it means a re-initialization is being attempted.

say %h = a => 42, b => 666; # same as %h.STORE( (a => 42, b => 666) )
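Here is a sketch of the immutability check described above, assuming :INITIALIZE behaves as stated. The hypothetical Frozen class accepts its initial values and refuses any later re-initialization.

```raku
class Frozen does Associative {
    has %!store;
    method AT-KEY($key) { %!store{$key} }     # no "is raw": values come back read-only
    method keys()       { %!store.keys }
    method STORE(\initial, :$INITIALIZE) {
        die "Frozen is immutable" unless $INITIALIZE;
        %!store = initial;
        self                                  # STORE returns the invocant
    }
}

my %f is Frozen = a => 42, b => 666;          # first time: STORE gets :INITIALIZE
say %f<a>;                                    # 42
my $reinit = try { %f = c => 1; True };       # second time: no :INITIALIZE, so it dies
say $reinit ?? "re-initialized" !! "re-initialization refused";   # re-initialization refused
```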

Although not an uppercase named method, it is an important interface method: the keys method is expected to return the keys in the object.

my %h = a => 42, b => 666;

say %h.keys; # (a b)
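Putting the whole cast together, here is a sketch of a case-insensitive Associative class. CIHash is a hypothetical name, not an ecosystem module. It folds every key with .fc and delegates the actual storage to a private Hash.

```raku
class CIHash does Associative {
    has %!store;   # keyed by the case-folded key

    method AT-KEY($key) is raw              { %!store.AT-KEY($key.fc) }
    method EXISTS-KEY($key)                 { %!store.EXISTS-KEY($key.fc) }
    method DELETE-KEY($key)                 { %!store.DELETE-KEY($key.fc) }
    method ASSIGN-KEY($key, \value) is raw  { %!store.ASSIGN-KEY($key.fc, value) }
    method BIND-KEY($key, \value) is raw    { %!store.BIND-KEY($key.fc, value) }
    method keys()                           { %!store.keys }

    method STORE(\initial, :$INITIALIZE) {
        my %plain = initial;   # let Hash handle both pairs and key, value lists
        %!store = %plain.map(-> $pair { $pair.key.fc => $pair.value });
        self
    }
}

my %h is CIHash = Hello => 1, World => 2;
say %h<HELLO>;             # 1
say %h<world>:exists;      # True
%h<wOrLd>:delete;
say %h<World>:exists;      # False
%h<NEW> = 3;               # stored under "new"
my $answer = 41;
%h<Answer> := $answer;     # BIND-KEY: the hash now holds $answer's container
$answer++;
say %h<answer>;            # 42
say %h.keys.sort;          # (answer hello new)
```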

Again, that's a lot to take in (especially if you didn't read the previous post)! But if it is just a simple customization you wish to make to the basic functionality of e.g. a hash, you can simply inherit from the Hash class. Here's a simple, contrived example that will return any value doubled as a string:

class Hash::Twice is Hash {
    method AT-KEY($key) { callsame() x 2 }
}

my %h is Hash::Twice = a => 42, b => 666;

say "$_: %h{$_}" for %h.keys;

will show:

a: 4242

b: 666666

Note that in this case the callsame function is called to get the actual value from the underlying hash.

If you want to be able to do more than just simple modifications, but for instance have an existing data structure on which you want to provide an Associative interface, it becomes a bit more complicated and potentially cumbersome.

Fortunately there are a number of modules in the ecosystem that will help you to create a consistent Associative interface for your class.

The Hash::Agnostic distribution provides a Hash::Agnostic role with all of the necessary logic for making your object act as a Hash. The only methods that must be provided are the AT-KEY and keys methods. Your class may provide more methods for functionality or performance reasons.

The Map::Agnostic distribution provides a Map::Agnostic role with all of the necessary logic for making your object act as a Map. The only methods that must be provided are the AT-KEY and keys methods. As with Hash::Agnostic, your class may provide more methods for functionality or performance reasons.

Here is a very contrived example in which a Hash::Int class is created that provides an Associative interface whose keys can only be integer values. Under the hood it uses an array to store the values:

use Hash::Agnostic;

class Hash::Int does Hash::Agnostic {
    has @!values;

    method AT-KEY(Int:D $index) {
        @!values.AT-POS($index)
    }

    method keys() {
        (^@!values).grep: { @!values.EXISTS-POS($_) }
    }

    method STORE(\values) {
        my @values;
        for Map.CREATE.STORE(values, :INITIALIZE) {
            @values.ASSIGN-POS(.key.Int, .value)
        }
        @!values := @values;
        self
    }
}

and can be used like this:

my %h is Hash::Int = <42 a 666 b 137 c>;

say %h<42>;

dd %h;

which would show:

a

Hash::Int.new(42 => "a",137 => "c",666 => "b")

Again, note that the STORE method had to be provided by the class to allow for the is Hash::Int syntax to work.

If you're wondering what's happening with Map.CREATE.STORE(values, :INITIALIZE): the initialization logic of hashes and maps allows for both separate key,value initialization and key=>value initialization, and any mix of them. So this is just a quick way to use that rather specialized logic of Map.new to create a consistent Seq of Pairs with which to initialize the underlying array.

Raku actually has a syntax for creating a Hash that only takes Int values as keys: my %h{Int}. This creates a so-called "object hash" with different performance characteristics from the approach taken with Hash::Int.
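Here is a short sketch of such an object hash. The keys stay Int objects rather than being turned into strings, and keys of another type are rejected.

```raku
my %h{Int} = 42 => "a", 666 => "b", 137 => "c";

say %h{42};                           # a
say %h.keys.map({ .^name });          # (Int Int Int)

my $ok = try { %h{"x"} = "d"; True }; # a Str key fails the Int constraint
say $ok ?? "stored" !! "key rejected";   # key rejected
```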

This concludes the tenth episode of cases of UPPER language elements in the Raku Programming Language, the third episode discussing interface methods.

In this episode the AT-KEY family of methods was described, as well as some simple customizations and some handy Raku modules that can help you create a fully functional interface: Map::Agnostic and Hash::Agnostic.

Stay tuned for the next episode!

Part of traveling the world as an Anglophone involves the uncomfortable realization that everyone else is better at learning your language than people like you are at learning theirs. It’s particularly obvious in the world of programming languages, where English-derived language and syntax rules the roost.